Publisher-Subscriber Pattern - Decoupling Services Through Messaging



Ahmed Muhi - 24 Sep, 2023

Introduction: The Tight Coupling Problem

You’ve split your monolith into services. Now you’re about to discover the #1 problem everyone hits: tight coupling.

Your order service is the heart of your e-commerce application. When a customer places an order, it needs to call the inventory service to reduce stock, the shipping service to create a shipment, the notification service to email the customer, the analytics service to track metrics, and the payment service to process the transaction.

Each call is synchronous - your order service waits for a response from each one before moving to the next. This works fine at first. Then reality hits.

Here’s what breaks:

The shipping service gets slow because their database is under load. Now every order takes 30 seconds to complete because your order service waits for shipping to respond. Orders that don’t even need shipping yet are stuck waiting. Your entire checkout grinds to a halt because one downstream service is struggling.

Deployment becomes a coordination nightmare. The shipping team deploys a new version of their API that changes the response format. Your order service breaks. Now deployments require coordination meetings, careful timing, and crossed fingers. One team can’t move without checking with five others first.

This is tight coupling, and it doesn’t scale. Your services are so intertwined that you can’t deploy them independently, can’t add features without touching multiple codebases, and can’t isolate failures. What started as a simple, logical architecture has become a deployment bottleneck and a maintenance nightmare.

But there’s a better way.

The Publisher-Subscriber (Pub/Sub) pattern exists to solve this.

Instead of services calling each other directly, they communicate through a message broker. The order service publishes an “OrderPlaced” event and moves on. Interested services - inventory, shipping, notifications, analytics - subscribe to that event and process it independently. Services stay decoupled, failures stay isolated, and teams can deploy without coordination.

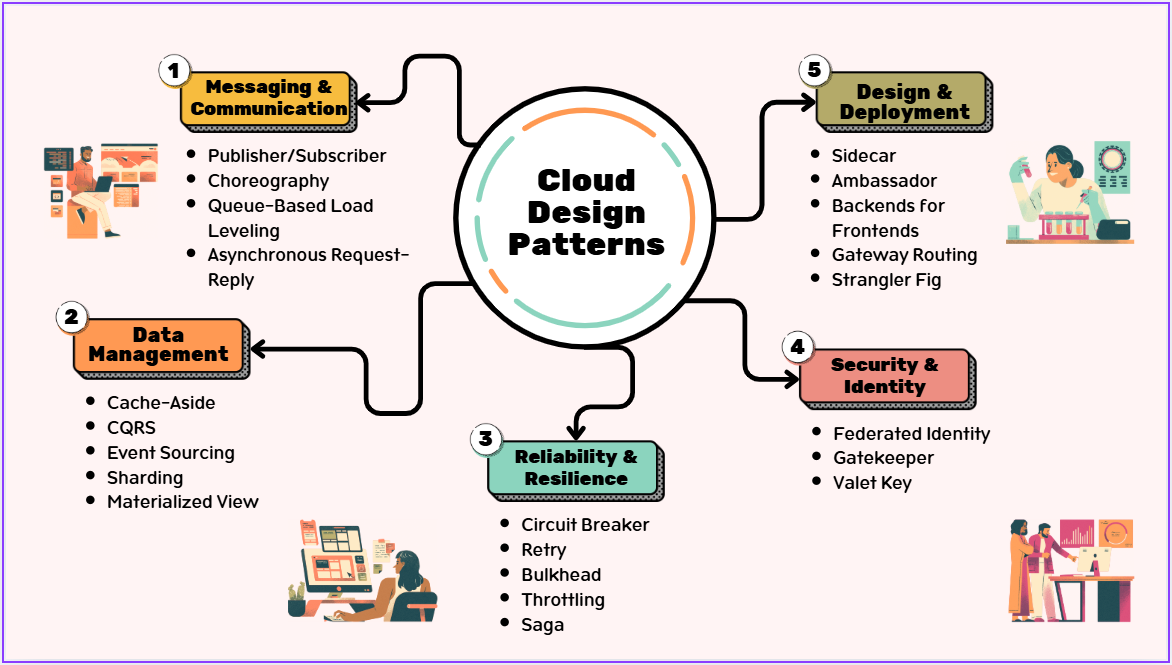

This is Part 2 of our cloud-native patterns series. In Part 1, we explored why these patterns exist and the reality of building distributed systems. Now we’re solving the first problem: tight coupling.

In this article, you’ll learn:

- How Pub/Sub decouples services through asynchronous messaging (with concrete examples)

- Which Azure service to choose: Service Bus, Event Grid, or Event Hubs

- The operational trade-offs: message delivery guarantees, idempotency, eventual consistency

- When Pub/Sub is the right choice (and when it’s not)

Understanding these trade-offs is crucial. Pub/Sub is powerful, but it introduces complexity that direct calls don’t have. Let’s explore when that trade-off makes sense and how to navigate it.

About This Series

This is Part 2 in a series on cloud-native architecture patterns. In Part 1, we explored why cloud-native patterns exist and the reality of distributed systems. Now we’re diving into the first pattern: Pub/Sub.

In this series:

- Introduction to Cloud-Native Patterns

- Publisher-Subscriber Pattern (Pub/Sub) ← You are here

- Saga Pattern (Distributed Transactions)

- Circuit Breaker Pattern

- API Gateway Pattern

- CQRS Pattern

- Event Sourcing

- And more as we go…

Each article builds on the previous one, but you can jump in anywhere. If you haven’t read Part 1, you might want to start there to understand the bigger picture.

What is the Publisher-Subscriber Pattern?

Remember the coordination nightmare? Six teams can’t deploy without checking with each other first. One slow service brings down your entire checkout.

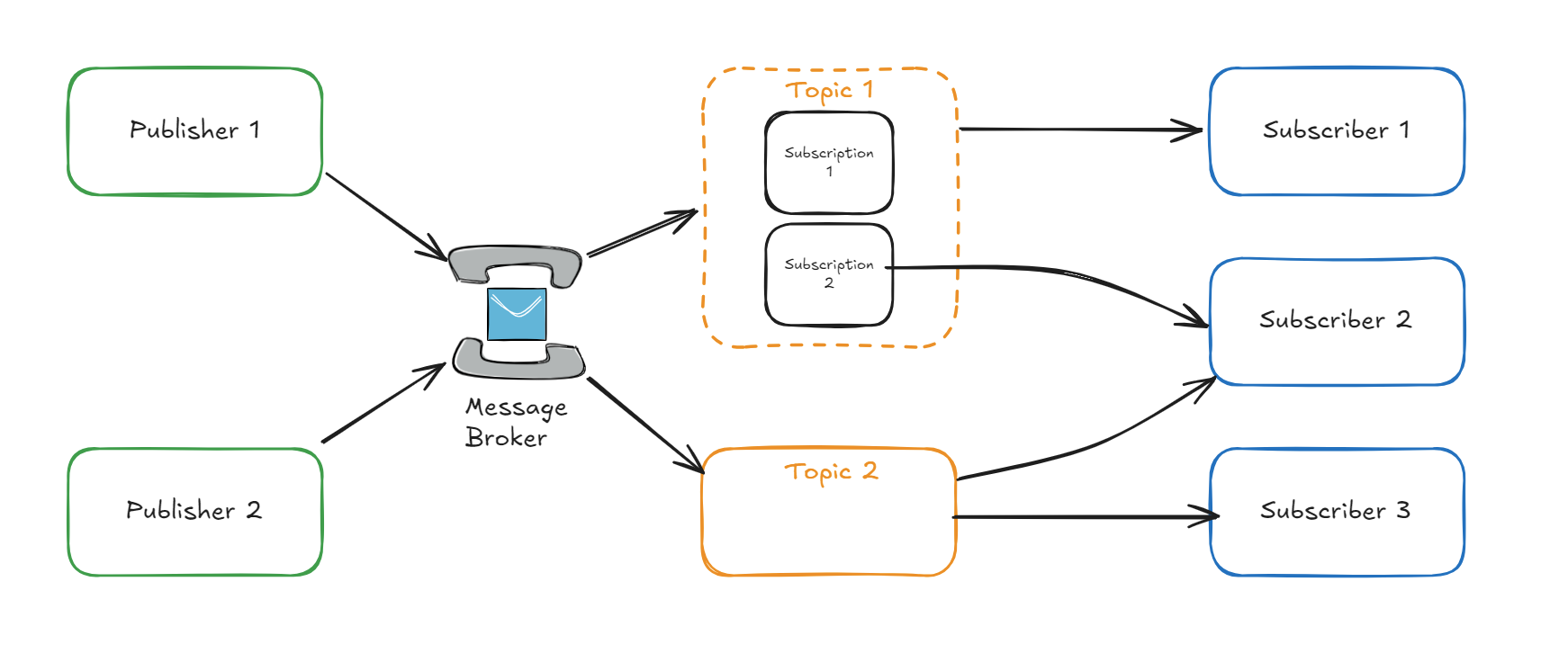

Pub/Sub solves this by putting a message broker between your services.

Here’s what this looks like:

Instead of your order service calling inventory, shipping, notifications, analytics, and payment directly, it does something simpler: it creates an “OrderPlaced” event with all the order details and publishes it to a message broker. That’s it. The order service is done. It moves on to processing the next order.

The message broker - think of it as a smart post office - receives the event and checks: “Who’s subscribed to OrderPlaced events?”

Inventory service is subscribed. Shipping service is subscribed. Notifications, analytics, payment - all subscribed.

The broker delivers a copy of the event to each one. They all receive it and process it independently, in parallel, without knowing about each other.

The Four Pub/Sub Core Components

Every Pub/Sub system has these components working together:

Publisher (your order service): Creates events and sends them to the broker. When an order is placed, it publishes an “OrderPlaced” event. This is “fire-and-forget” - the order service waits only for the broker to accept the event, not for any subscriber to receive or process it. It publishes and immediately returns to its core work.

Message Broker: The central intermediary that handles all communication. It receives events from publishers, maintains a registry of which services subscribe to which topics, and routes each event to all relevant subscribers. This is what makes decoupling possible - services never talk directly to each other, only to the broker.

Topic (like “OrderPlaced”): A logical channel that organizes events. Publishers send events to specific topics. Subscribers express interest in topics. Topics provide the filtering mechanism - subscribers only receive events from topics they care about.

Subscribers (inventory, shipping, notifications, etc.): Receive and process events. Each subscriber registers interest in one or more topics with the broker. When an event is published to a subscribed topic, the subscriber receives it and processes it according to its own logic. Multiple subscribers can process the same event, each doing something different with it.
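To see how the four roles fit together, here is a minimal in-memory sketch in TypeScript. `InMemoryBroker`, `subscribe`, and `publish` are hypothetical names for illustration, not an Azure SDK API. A real broker runs as a separate service and adds persistence, network transport, and delivery guarantees.

```typescript
// Hypothetical in-memory broker for illustration only: no persistence,
// no network, and delivery happens synchronously inside publish().
type Handler<T> = (event: T) => void;

class InMemoryBroker {
  // Registry: topic name -> handlers of every subscriber to that topic.
  private readonly topics = new Map<string, Handler<unknown>[]>();

  // Subscriber side: register interest in a topic.
  subscribe<T>(topic: string, handler: Handler<T>): void {
    const handlers = this.topics.get(topic) ?? [];
    handlers.push(handler as Handler<unknown>);
    this.topics.set(topic, handlers);
  }

  // Publisher side: hand the event to the broker and move on.
  // The publisher never learns who, if anyone, is subscribed.
  publish<T>(topic: string, event: T): void {
    for (const handler of this.topics.get(topic) ?? []) {
      try {
        handler(event); // every subscriber receives the same event
      } catch (err) {
        // A failing subscriber must not break the publisher or its peers.
        console.error(`subscriber on ${topic} failed:`, err);
      }
    }
  }
}

type OrderPlaced = { orderId: string; totalAmount: number };

const broker = new InMemoryBroker();
const received: string[] = [];

broker.subscribe<OrderPlaced>("OrderPlaced", (e) => received.push(`inventory:${e.orderId}`));
broker.subscribe<OrderPlaced>("OrderPlaced", (e) => received.push(`shipping:${e.orderId}`));
// Adding a new consumer is one more subscribe call; the publisher is unchanged.
broker.subscribe<OrderPlaced>("OrderPlaced", (e) => received.push(`fraud:${e.orderId}`));

broker.publish<OrderPlaced>("OrderPlaced", { orderId: "12345", totalAmount: 99.99 });
console.log(received.join(", ")); // inventory:12345, shipping:12345, fraud:12345
```

The order service's code is the single `publish` call. Everything about who consumes the event lives in the subscriptions.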

This is How Pub/Sub Solves Tight Coupling

The order service doesn’t know about inventory, shipping, or notifications. It just publishes an event. Want to add fraud detection tomorrow? The fraud service simply subscribes to “OrderPlaced” events. Zero code changes to the order service. No redeployment. No coordination.

If the shipping service is down? Orders keep processing. The broker holds the events in shipping’s queue, and when shipping comes back online, it processes the backlog. One service’s problem doesn’t cascade to everyone else.
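The “broker holds the events” behavior comes from giving each subscriber its own queue. Below is a hypothetical in-memory sketch. The classes `Topic` and `Subscription` loosely mirror Service Bus topics and subscriptions but are not its API or internals. Publishing copies the event into every subscription’s queue. An offline subscriber builds up a backlog and drains it when it returns, while the publisher and the other subscribers carry on.

```typescript
// Hypothetical sketch: each subscription owns a queue, so an offline subscriber
// accumulates a backlog instead of blocking the publisher.
type OrderPlaced = { orderId: string };

class Subscription {
  online = true;
  private readonly backlog: OrderPlaced[] = [];
  private readonly handle: (event: OrderPlaced) => void;

  constructor(handle: (event: OrderPlaced) => void) {
    this.handle = handle;
  }

  enqueue(event: OrderPlaced): void {
    this.backlog.push(event);
    this.drain();
  }

  // Process waiting events oldest-first, but only while the subscriber is up.
  drain(): void {
    while (this.online && this.backlog.length > 0) {
      this.handle(this.backlog.shift()!);
    }
  }

  get pending(): number {
    return this.backlog.length;
  }
}

class Topic {
  private readonly subscriptions: Subscription[] = [];

  add(subscription: Subscription): void {
    this.subscriptions.push(subscription);
  }

  // Copy the event into every subscription's queue; never wait on a subscriber.
  publish(event: OrderPlaced): void {
    for (const subscription of this.subscriptions) subscription.enqueue(event);
  }
}

const shipped: string[] = [];
const reserved: string[] = [];
const orders = new Topic();
const shipping = new Subscription((e) => shipped.push(e.orderId));
orders.add(shipping);
orders.add(new Subscription((e) => reserved.push(e.orderId)));

shipping.online = false;          // shipping goes down
orders.publish({ orderId: "1" }); // checkout keeps publishing
orders.publish({ orderId: "2" });
console.log(shipping.pending, reserved.length); // 2 2: shipping is behind, inventory is not

shipping.online = true; // shipping recovers...
shipping.drain();       // ...and works through its backlog in order
console.log(shipped.join(",")); // 1,2
```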

If the shipping team deploys a new API? Doesn’t matter. They’re reading events from the broker, not being called directly by the order service. They can deploy whenever they want.

This is the power of decoupling through asynchronous messaging. Services communicate through events, not through direct knowledge of each other. Teams work independently. Failures stay isolated. The coordination nightmare is over.

Now let’s look at how to implement this using Azure services.

Implementing Pub/Sub in Azure

Now that you understand how Pub/Sub decouples services, let’s look at how to build this.

Azure offers three messaging services that implement the Pub/Sub pattern. All three share the same core concept - publishers send events to a named channel (a topic in Service Bus and Event Grid, an event hub in Event Hubs), the broker makes them available, and subscribers receive them - but each is optimized for different scenarios.

The question isn’t “which service is best” - it’s “which service fits your specific problem?” Let’s explore each one.

Azure Service Bus: For Business-Critical Events

Think about a payment processing system. You publish “PaymentProcessed” events and your accounting service subscribes. Here’s the non-negotiable requirement: the accounting service MUST receive every single event. You can’t lose a transaction.

This is where Service Bus shines.

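Guaranteed delivery: Service Bus stores every message durably. In peek-lock mode, it keeps each message until the subscriber confirms it was processed. If the accounting service is down when you publish “PaymentProcessed,” Service Bus holds the message in accounting’s subscription. When accounting comes back online - hours later, even days later, as long as the message’s time-to-live hasn’t expired - it receives the event. No messages lost.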

Message ordering: Some events need to happen in order. A deposit followed by a withdrawal must be processed in that order. Reverse them and the withdrawal may be rejected or overdraw the account. Service Bus supports this with sessions: on a session-enabled subscription, all messages with the same session ID (like the same customer account) are delivered in the order the broker received them.
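Ordering is scoped to a session rather than the whole topic, so different accounts can still be processed in parallel. The hypothetical in-memory sketch below illustrates that idea and is not the Service Bus API. Messages are grouped by `sessionId`, and a receiver that accepts a session gets that session’s messages in the order they were sent.

```typescript
// Hypothetical sketch of session-style ordering: FIFO within one session ID,
// no ordering promise between different session IDs.
type AccountEvent = { sessionId: string; kind: "deposit" | "withdrawal"; amount: number };

class SessionQueue {
  private readonly sessions = new Map<string, AccountEvent[]>();

  send(event: AccountEvent): void {
    const queue = this.sessions.get(event.sessionId) ?? [];
    queue.push(event); // appending preserves send order within the session
    this.sessions.set(event.sessionId, queue);
  }

  // A receiver takes one session and gets its events in the order they were sent.
  // Different sessions can be handed to different receivers in parallel.
  acceptSession(sessionId: string): AccountEvent[] {
    const queue = this.sessions.get(sessionId) ?? [];
    this.sessions.delete(sessionId);
    return queue;
  }
}

// Apply one account's events in order; a withdrawal seen before its deposit fails.
function applyInOrder(events: AccountEvent[]): number {
  let balance = 0;
  for (const e of events) {
    if (e.kind === "deposit") {
      balance += e.amount;
    } else if (e.amount > balance) {
      throw new Error(`insufficient funds for withdrawal of ${e.amount}`);
    } else {
      balance -= e.amount;
    }
  }
  return balance;
}

const accounts = new SessionQueue();
accounts.send({ sessionId: "account-A", kind: "deposit", amount: 100 });
accounts.send({ sessionId: "account-B", kind: "deposit", amount: 50 });
accounts.send({ sessionId: "account-A", kind: "withdrawal", amount: 80 });

console.log(applyInOrder(accounts.acceptSession("account-A"))); // 20
console.log(applyInOrder(accounts.acceptSession("account-B"))); // 50
```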

Dead-letter handling: When subscribers can’t process a message - maybe it’s malformed, maybe it triggers a bug - Service Bus doesn’t let that one bad message block everything else. It moves the problematic message to a dead-letter queue where you can investigate it while other messages keep flowing.

Service Bus is the right choice when:

- You’re publishing business-critical events like orders, payments, or inventory changes

- Subscribers need guaranteed delivery - every event must be processed

- Some events must be processed in order

Remember the shipping service that brought down your checkout? Service Bus prevents that. If shipping goes down, the broker holds the “OrderPlaced” events. Your checkout keeps processing orders. When shipping recovers, it catches up on its backlog.

Azure Event Grid: For Real-Time Reactions

Picture a photo sharing app. Users upload photos, and you need things to happen immediately - generate thumbnails, scan for inappropriate content, update the database, notify followers. Every second of delay is a second users are waiting.

Event Grid is built for this kind of instant response.

Near-instant delivery: When your image service publishes a “PhotoUploaded” event, Event Grid delivers it to all subscribers within seconds. Not minutes. Seconds. Your thumbnail generator, content scanner, and database updater all receive the event almost instantly and start processing in parallel.

Built-in Azure event sources: Event Grid has a unique advantage - it automatically publishes events from Azure resources. When a file lands in Blob Storage, Event Grid publishes the event. Your services just subscribe. You don’t write publishing code. The event appears, your subscribers react.
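When a subscriber is a plain webhook rather than an Azure Function, Event Grid delivers an HTTP POST whose body is a JSON array of events. The endpoint must also complete a validation handshake when the subscription is created. Azure Functions with an Event Grid trigger handle that handshake for you.

The framework-free sketch below handles the request body and assumes the Event Grid event schema rather than CloudEvents. `handleEventGridPost` and `makeThumbnail` are hypothetical, and the HTTP server wiring is left out. Check the current docs for the exact fields of the event types you subscribe to.

```typescript
// Subset of the Event Grid event schema that this sketch reads.
interface EventGridEvent {
  id: string;
  eventType: string;
  subject: string;
  eventTime: string;
  data: Record<string, unknown>;
}

// Hypothetical stand-in for real thumbnail work (e.g. handing off to a worker).
const thumbnailsQueued: string[] = [];
function makeThumbnail(blobUrl: string): void {
  thumbnailsQueued.push(blobUrl);
}

// Handles the parsed JSON body of one POST from Event Grid, which is an array
// of events. Returns the object to send back as the JSON response body.
function handleEventGridPost(events: EventGridEvent[]): Record<string, unknown> {
  for (const event of events) {
    // Handshake sent when the subscription is created: echo the code back.
    if (event.eventType === "Microsoft.EventGrid.SubscriptionValidationEvent") {
      return { validationResponse: event.data["validationCode"] };
    }
    if (event.eventType === "Microsoft.Storage.BlobCreated") {
      makeThumbnail(String(event.data["url"]));
    }
    // Anything else is ignored; responding 200 OK marks the delivery as successful.
  }
  return {};
}

const reply = handleEventGridPost([{
  id: "1",
  eventType: "Microsoft.EventGrid.SubscriptionValidationEvent",
  subject: "",
  eventTime: "2023-09-24T00:00:00Z",
  data: { validationCode: "abc123" },
}]);
console.log(JSON.stringify(reply)); // {"validationResponse":"abc123"}

handleEventGridPost([{
  id: "2",
  eventType: "Microsoft.Storage.BlobCreated",
  subject: "/blobServices/default/containers/photos/blobs/cat.jpg",
  eventTime: "2023-09-24T00:00:01Z",
  data: { url: "https://example.blob.core.windows.net/photos/cat.jpg" },
}]);
console.log(thumbnailsQueued.length); // 1
```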

Serverless integration: Your subscribers can be Azure Functions - serverless code that only runs when events arrive. When “PhotoUploaded” fires, three functions spin up, each does its job, and they shut down. On the Consumption plan, you pay only for those executions and the time they ran. No servers sitting idle. No infrastructure to manage.

Event Grid is the right choice when:

- Subscribers need to react to events immediately - seconds matter

- You’re building serverless event-driven applications with Azure Functions

- Publishers are Azure resources like Blob Storage or Resource Manager, or your own apps publishing to custom topics

Remember how adding a feature meant touching multiple codebases? With Event Grid and serverless functions, you deploy a fraud detection function that subscribes to “OrderPlaced.” No coordination. No touching the order service. Just subscribe and go.

Azure Event Hubs: For Massive Data Streams

Now imagine a completely different scenario. You have 100,000 IoT devices - sensors on factory equipment - each publishing temperature, pressure, and vibration readings every single second. That’s 100,000 to 300,000 events per second flowing into your system, depending on whether the three readings are batched into one message. The load grows with every device you add.

Event Hubs is designed for this kind of massive throughput.

Massive throughput: Event Hubs ingests millions of events per second and makes them available as a continuous stream. You can process the stream in real-time - watching for anomalies, calculating rolling averages, triggering alerts when sensors show concerning patterns. Or you can store the entire stream and analyze it later with machine learning.
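Rolling averages and anomaly alerts boil down to keeping a small window of recent readings per sensor. The minimal sketch below shows that idea and is independent of any Azure SDK. The window of 5 readings, the 20% tolerance, and the sensor ID are arbitrary assumptions. In practice this logic would typically run inside a stream processor such as Azure Stream Analytics or Spark reading from Event Hubs.

```typescript
// Hypothetical sliding-window monitor: keeps the last `size` readings per sensor
// and flags a reading that strays too far from that sensor's recent average.
type Reading = { sensorId: string; temperature: number };

class RollingMonitor {
  private readonly windows = new Map<string, number[]>();
  private readonly size: number;
  private readonly tolerance: number; // allowed fractional deviation, 0.2 = 20%

  constructor(size: number, tolerance: number) {
    this.size = size;
    this.tolerance = tolerance;
  }

  // Returns true if the reading is anomalous compared with a full window.
  observe(reading: Reading): boolean {
    const recent = this.windows.get(reading.sensorId) ?? [];
    const average = recent.reduce((sum, t) => sum + t, 0) / Math.max(recent.length, 1);
    const anomalous =
      recent.length === this.size &&
      Math.abs(reading.temperature - average) > this.tolerance * Math.abs(average);

    recent.push(reading.temperature);
    if (recent.length > this.size) recent.shift(); // slide the window forward
    this.windows.set(reading.sensorId, recent);
    return anomalous;
  }
}

const monitor = new RollingMonitor(5, 0.2);
const temperatures = [70, 71, 69, 70, 70, 71, 95];
const alerts = temperatures.map((t) => monitor.observe({ sensorId: "press-7", temperature: t }));
console.log(alerts.indexOf(true)); // 6: only the jump to 95 is flagged
```

The flagged reading still enters the window here. Whether outliers should influence future averages is a design choice.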

Stream processing: This isn’t about individual discrete events like “OrderPlaced” or “PhotoUploaded.” This is about processing continuous data streams at scale. Subscribers can use stream processing tools like Azure Stream Analytics or Apache Spark to analyze the event stream in real-time - detecting patterns, calculating aggregates, identifying anomalies across thousands of readings per second.

Event capture: With Event Hubs Capture enabled, the event stream is automatically written to Blob Storage or Data Lake Storage. Later, you can run batch processing jobs over historical data - training machine learning models, generating reports, finding trends. Real-time processing and historical analysis, both from the same event stream.
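What sets Event Hubs apart from the other two is that consumers pull from a retained log (kept for the configured retention period). Each consumer group tracks its own position in that log, saved as a checkpoint. A real-time processor and a batch job can therefore read the same events independently, and a reader can rewind to replay history.

The simplified, single-partition in-memory sketch below illustrates that model. `EventLog` and `ConsumerGroup` are hypothetical names, not the `@azure/event-hubs` API.

```typescript
// Hypothetical single-partition log: events are appended and retained,
// and reading never removes them.
class EventLog<T> {
  private readonly entries: T[] = [];

  append(event: T): number {
    this.entries.push(event);
    return this.entries.length - 1; // offset of the new event
  }

  readFrom(offset: number, max: number): T[] {
    return this.entries.slice(offset, offset + max);
  }
}

// Each consumer group tracks its own position (checkpoint) in the same log.
class ConsumerGroup<T> {
  private checkpoint = 0;
  private readonly log: EventLog<T>;

  constructor(log: EventLog<T>) {
    this.log = log;
  }

  // Pull the next batch and advance this group's checkpoint past it.
  read(max: number): T[] {
    const batch = this.log.readFrom(this.checkpoint, max);
    this.checkpoint += batch.length;
    return batch;
  }

  // Rewind to replay history, e.g. after fixing a bug in the processor.
  seek(offset: number): void {
    this.checkpoint = offset;
  }
}

const telemetry = new EventLog<number>();
[20.1, 20.4, 35.0, 20.2].forEach((t) => telemetry.append(t));

const realtime = new ConsumerGroup(telemetry); // keeps up as events arrive
const nightly = new ConsumerGroup(telemetry);  // reads later, in bulk

const first = realtime.read(2);   // 20.1, 20.4
const second = realtime.read(2);  // 35, 20.2
const replayed = nightly.read(10); // all four: realtime's reads did not consume them
console.log(replayed.length); // 4
```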

Event Hubs is the right choice when:

- Publishers are generating millions of events per second

- Subscribers need to process continuous event streams, not individual messages

- You need both real-time stream processing and batch historical analysis

- You’re working with IoT sensors, application logs, or clickstream data

The scenarios are different, but the pattern is the same: Publishers don’t know about subscribers. Communication goes through a message broker. Events fan out to multiple consumers. What changes is the type of events and the scale you’re operating at.

Choosing the Right Service

All three services implement Pub/Sub - the pattern you learned earlier. They all decouple publishers from subscribers through a message broker. They all support fanout to multiple consumers.

The question is what you’re building:

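Can you afford to lose events?

- If no - every event is critical and must be held until a subscriber settles it → Service Bus

- If yes, within limits → Event Grid or Event Hubs (Event Grid retries delivery for up to 24 hours by default, then drops the event unless dead-lettering is configured; Event Hubs keeps events only for its retention period)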

What’s your event volume?

- Hundreds to thousands per second → Service Bus or Event Grid

- Millions per second → Event Hubs

How fast do subscribers need to react?

- Immediately, with events pushed to their handlers (seconds) → Event Grid

- At their own pace, pulling from a durable backlog (delays of minutes are okay) → Service Bus

- Continuous stream processing → Event Hubs

What are you publishing?

- Business events (orders, payments) → Service Bus

- State changes (file uploaded, resource created) → Event Grid

- Telemetry streams (sensors, logs) → Event Hubs

Now let’s look at best practices for implementing Pub/Sub successfully, regardless of which service you choose.

Best Practices for Implementing Pub/Sub

You’ve chosen your Azure service. Now let’s talk about the challenges every Pub/Sub implementation faces - and how to handle them.

These practices apply whether you’re using Service Bus, Event Grid, or Event Hubs. They address the common problems that emerge when publishers and subscribers communicate through a message broker.

Design Idempotent Message Handlers

With Pub/Sub, messages can be delivered more than once. Service Bus (in peek-lock mode), Event Grid, and Event Hubs all provide at-least-once delivery. Network issues, service restarts, or broker retries can cause the same event to reach a subscriber multiple times. This isn’t an edge case - it’s normal Pub/Sub behavior.

This means every subscriber must be idempotent - it must handle receiving the same message multiple times and produce the same result.

Here’s the pattern: Before processing a message, check if you’ve already processed its unique ID. Store processed message IDs in a database or cache:

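```typescript
// alreadyProcessed, processMessage, recordAsProcessed and acknowledge stand in
// for your own storage and broker calls.
function handle(message: { id: string }): void {
  if (alreadyProcessed(message.id)) {
    acknowledge(message); // Duplicate: settle it so the broker stops redelivering
    return; // Skip, already handled
  }
  processMessage(message);
  // A crash between these two calls means a reprocess on redelivery, so record
  // the ID in the same transaction as the work wherever you can.
  recordAsProcessed(message.id);
  acknowledge(message); // Acknowledge only after the work is recorded
}
```

Without this, you get double-processing. Your payment subscriber receives an “OrderPlaced” event with order ID 12345 and charges the customer’s card. The network fails before the subscriber can acknowledge receipt. The broker resends the event. Without the idempotency check, you charge the customer twice. With it, you detect the duplicate, skip processing, and acknowledge - preventing double-charging.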
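Here is a runnable version of the same check, under a few assumptions. An in-memory `Set` stands in for the database or cache; unlike a real store, it is lost on restart and not shared between subscriber instances. `chargeCard`, `InboundMessage`, and the message shape are hypothetical. The broker’s redelivery is simulated by handling the same message twice.

```typescript
// Hypothetical message shape; a real broker supplies its own message ID.
type InboundMessage = { id: string; body: { orderId: string; totalAmount: number } };

// Stand-in for a durable store of processed IDs (database table, cache, ...).
const processedIds = new Set<string>();
const charges: string[] = [];
const acked: string[] = [];

// Hypothetical side effect that must not be repeated.
function chargeCard(orderId: string, amount: number): void {
  charges.push(`${orderId}:${amount}`);
}

function acknowledge(message: InboundMessage): void {
  acked.push(message.id);
}

function handle(message: InboundMessage): void {
  if (processedIds.has(message.id)) {
    acknowledge(message); // duplicate: settle it so the broker stops redelivering
    return;
  }
  chargeCard(message.body.orderId, message.body.totalAmount);
  // If the process crashed right here, a redelivery would charge again.
  // Many payment APIs accept an idempotency key (such as the message ID) for this.
  processedIds.add(message.id);
  acknowledge(message);
}

const msg: InboundMessage = { id: "msg-1", body: { orderId: "12345", totalAmount: 99.99 } };
handle(msg); // first delivery: charges the card
handle(msg); // redelivery after a lost acknowledgment: skipped
console.log(charges.length); // 1
```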

Include All Necessary Context in Events

Here’s a common mistake: Your “OrderPlaced” event only contains an order ID. Every subscriber has to call back to the order service to get order details - customer address, items, totals, everything.

This defeats the purpose of decoupling. Subscribers are still dependent on the publisher being available and responsive.

Publishers should include all the information subscribers need to process the event. Not just IDs - actual data:

```jsonc
// ❌ Minimal event - subscribers must call order service
{
  "eventType": "OrderPlaced",
  "orderId": "12345"
}

// ✅ Complete event - subscribers have what they need
{
  "eventType": "OrderPlaced",
  "orderId": "12345",
  "customerId": "789",
  "shippingAddress": { ... },
  "items": [...],
  "totalAmount": 99.99,
  "currency": "USD",
  "timestamp": "2025-10-08T14:30:00Z"
}
```

Your shipping subscriber receives “OrderPlaced” with the full shipping address and items. It creates the shipment immediately without calling the order service. Even if the order service is down for maintenance, shipping continues processing the backlog of events.
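A shared type is one way to keep publishers and subscribers agreeing on what a complete event contains. In this hypothetical TypeScript sketch, `OrderPlacedEvent`, `createShipment`, and the address and item fields are assumptions (the JSON example elides the item details), and the sample values are made up. The shipping handler builds a shipment from the event alone, with no call back to the order service.

```typescript
// Hypothetical event contract for "OrderPlaced"; the address and item fields
// are assumptions.
interface OrderItem {
  sku: string;
  quantity: number;
}

interface OrderPlacedEvent {
  eventType: "OrderPlaced";
  orderId: string;
  customerId: string;
  shippingAddress: { line1: string; city: string; postalCode: string; country: string };
  items: OrderItem[];
  totalAmount: number;
  currency: string;
  timestamp: string; // ISO 8601, UTC
}

interface Shipment {
  orderId: string;
  destination: string;
  totalUnits: number;
}

// Everything the shipping service needs is in the event: no call to the order service.
function createShipment(event: OrderPlacedEvent): Shipment {
  const a = event.shippingAddress;
  return {
    orderId: event.orderId,
    destination: `${a.line1}, ${a.city} ${a.postalCode}, ${a.country}`,
    totalUnits: event.items.reduce((sum, item) => sum + item.quantity, 0),
  };
}

const raw = `{
  "eventType": "OrderPlaced", "orderId": "12345", "customerId": "789",
  "shippingAddress": { "line1": "1 Main St", "city": "Springfield", "postalCode": "12345", "country": "US" },
  "items": [{ "sku": "MUG-01", "quantity": 2 }, { "sku": "TEA-10", "quantity": 1 }],
  "totalAmount": 99.99, "currency": "USD", "timestamp": "2025-10-08T14:30:00Z"
}`;

// Unchecked cast: validate untrusted input against a schema in production.
const shipment = createShipment(JSON.parse(raw) as OrderPlacedEvent);
console.log(shipment.totalUnits); // 3
```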

Use Dead-Letter Queues for Failed Messages

Sometimes a message can’t be processed. Maybe it’s malformed. Maybe it references data that doesn’t exist. Maybe it triggers a bug in your subscriber code.

Without dead-letter handling, this “poison message” sits in your queue, gets retried endlessly, and blocks other messages from being processed.

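Configure your broker to move failed messages to a dead-letter queue after a set number of delivery attempts. Service Bus does this automatically once a message exceeds its maximum delivery count. Event Grid can dead-letter undeliverable events to a Blob Storage container. Event Hubs has no built-in dead-letter queue, so consumers have to park failed events somewhere themselves. Then monitor the dead-letter queue for new messages, investigate why they failed, fix the underlying issue, and optionally reprocess them.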

A malformed “PaymentProcessed” event arrives with a negative payment amount (due to a publisher bug). Your accounting subscriber fails to process it. After 5 failed delivery attempts (the maximum delivery count configured on this subscription; the Service Bus default is 10), Service Bus moves it to the dead-letter queue. Your dead-letter monitoring alerts you, you fix the publisher bug, and your queue continues processing valid messages - one bad message didn’t block thousands of good ones.
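The retry-then-dead-letter flow can be simulated in a few lines. This is a hypothetical, simplified sketch, not the Service Bus SDK. Failed messages go to the back of the queue, and a message is dead-lettered when a delivery fails after it has reached the maximum delivery count (5 here, matching the example). Valid messages keep flowing the whole time.

```typescript
// Hypothetical simulation of max-delivery-count dead-lettering.
type Payment = { paymentId: string; amount: number };
type Envelope = { body: Payment; deliveryCount: number };

class QueueWithDlq {
  readonly active: Envelope[] = [];
  readonly deadLetter: { body: Payment; reason: string }[] = [];
  private readonly maxDeliveryCount: number;

  constructor(maxDeliveryCount: number) {
    this.maxDeliveryCount = maxDeliveryCount;
  }

  send(body: Payment): void {
    this.active.push({ body, deliveryCount: 0 });
  }

  // Deliver until the queue is empty. A failed message is requeued unless it
  // has used up its deliveries, in which case it moves to the dead-letter list.
  process(handler: (p: Payment) => void): void {
    while (this.active.length > 0) {
      const envelope = this.active.shift()!;
      envelope.deliveryCount++;
      try {
        handler(envelope.body);
      } catch (err) {
        if (envelope.deliveryCount >= this.maxDeliveryCount) {
          this.deadLetter.push({ body: envelope.body, reason: String(err) });
        } else {
          this.active.push(envelope); // retry later; other messages keep flowing
        }
      }
    }
  }
}

const queue = new QueueWithDlq(5);
const booked: string[] = [];
let attemptsOnBadMessage = 0;
queue.send({ paymentId: "p1", amount: 40 });
queue.send({ paymentId: "p2", amount: -10 }); // produced by the publisher bug
queue.send({ paymentId: "p3", amount: 25 });

queue.process((p) => {
  if (p.amount < 0) {
    attemptsOnBadMessage++;
    throw new Error(`negative amount in ${p.paymentId}`);
  }
  booked.push(p.paymentId);
});

console.log(booked.join(","));            // p1,p3
console.log(queue.deadLetter[0]?.reason); // Error: negative amount in p2
```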

These three practices address the most common implementation challenges in Pub/Sub systems. They help you build subscribers that are resilient, efficient, and handle the realities of distributed messaging.

But Pub/Sub isn’t always the right choice. Let’s talk about when you shouldn’t use it.

When Pub/Sub is NOT the Right Pattern

Pub/Sub solves tight coupling through asynchronous messaging. But it’s not the answer to every distributed systems problem. Here’s when other patterns fit better.

When You Need Immediate Responses

The pattern mismatch: Pub/Sub is asynchronous. Publishers send events and move on without waiting for responses.

When this doesn’t work: Your checkout flow needs to know if payment succeeded before showing the order confirmation. You can’t publish a “ProcessPayment” event and hope for the best - you need an immediate yes/no answer.

The pattern you need: Request-Response. The caller waits for a direct response from the service it called. Payment succeeds or fails, and you know immediately which path to take.
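Request-Response and Pub/Sub often sit side by side in the same flow. In this hypothetical sketch, checkout waits for the payment decision and publishes “OrderPlaced” only after the charge is approved. `PaymentClient`, `Publish`, and the fake payment limit are assumptions. The call is synchronous here for brevity; a real one would be an awaited HTTP or gRPC request with a timeout.

```typescript
// Hypothetical payment API: the caller needs a yes/no answer before continuing.
type PaymentResult = { approved: boolean; reason?: string };

interface PaymentClient {
  charge(orderId: string, amount: number): PaymentResult;
}

type Publish = (topic: string, event: object) => void;

// Request-Response for the decision, Pub/Sub for everything that follows it.
function checkout(orderId: string, amount: number, payments: PaymentClient, publish: Publish): string {
  const result = payments.charge(orderId, amount); // wait for the answer
  if (!result.approved) {
    return `Payment declined: ${result.reason ?? "unknown reason"}`; // nothing published
  }
  publish("OrderPlaced", { orderId, totalAmount: amount }); // fire-and-forget from here on
  return `Order ${orderId} confirmed`;
}

// Fake collaborators for the example.
const published: string[] = [];
const fakePublish: Publish = (topic) => {
  published.push(topic);
};
const fakePayments: PaymentClient = {
  charge: (_orderId, amount) =>
    amount <= 500 ? { approved: true } : { approved: false, reason: "limit exceeded" },
};

console.log(checkout("12345", 99.99, fakePayments, fakePublish)); // Order 12345 confirmed
console.log(checkout("12346", 900, fakePayments, fakePublish));   // Payment declined: limit exceeded
console.log(published.length); // 1
```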

When You Need Strict Ordering Across All Consumers

The pattern mismatch: Pub/Sub delivers events to multiple subscribers independently. Coordinating strict ordering across all of them is complex.

When this doesn’t work: You’re processing financial ledger entries where every transaction must be processed in exact sequence by all subscribers. Out-of-order processing corrupts the ledger.

The pattern you need: Event Sourcing. All changes are captured as events in an append-only log. Order is guaranteed by design, and you can replay events to reconstruct state.
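Here is a minimal event-sourcing sketch, assuming an in-memory array as the append-only store; a real event store persists entries and serializes concurrent writers. `Ledger`, `append`, `replay`, and `balanceOf` are hypothetical names. The store assigns each entry a sequence number at write time, and every consumer derives balances by replaying entries in that order, so all of them see the same sequence.

```typescript
// Hypothetical append-only ledger: entries are never updated or deleted.
type LedgerEntry = { seq: number; account: string; delta: number };

class Ledger {
  private readonly entries: LedgerEntry[] = [];

  // The store assigns the sequence number, so order is fixed at write time.
  append(account: string, delta: number): LedgerEntry {
    const entry = { seq: this.entries.length + 1, account, delta };
    this.entries.push(entry);
    return entry;
  }

  // Replay from the start (or from a later position) in sequence order.
  replay(fromSeq = 1): readonly LedgerEntry[] {
    return this.entries.slice(fromSeq - 1);
  }
}

// Current state is derived by folding over the events, never stored directly.
function balanceOf(account: string, entries: readonly LedgerEntry[]): number {
  return entries
    .filter((e) => e.account === account)
    .reduce((sum, e) => sum + e.delta, 0);
}

const ledger = new Ledger();
ledger.append("acct-1", 100);
ledger.append("acct-1", -30);
ledger.append("acct-2", 50);
ledger.append("acct-1", 5);

console.log(balanceOf("acct-1", ledger.replay())); // 75
console.log(ledger.replay(3).map((e) => e.seq).join(",")); // 3,4
```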

When Your System is Small and Simple

The pattern mismatch: Pub/Sub adds operational complexity - message brokers, monitoring, idempotency, eventual consistency.

When this doesn’t work: You have 3-5 services, a small team, coordinated deployments. The overhead of Pub/Sub outweighs its benefits.

The pattern you need: Start simple. Direct service calls. Add complexity only when you hit the pain points - deployment coupling, cascading failures - that justify it.

Here’s the key insight: Most real systems use multiple patterns. Pub/Sub for decoupling and async workflows. Request-Response for immediate answers. Sagas for distributed transactions. The skill is recognizing which pattern fits which problem.

But what happens when services fail? Even with Pub/Sub decoupling your services, subscribers will fail - databases go down, networks hiccup, downstream APIs return errors. How do you handle that?

That’s where the Retry pattern comes in. Let’s explore it next.

What’s Next

You now understand how Pub/Sub decouples services through asynchronous messaging.

Publishers send events to a message broker. Subscribers receive them independently. Services stay loosely coupled - enabling independent deployment, fanout communication, and isolated failures.

You’ve learned how to implement Pub/Sub using Azure services - Service Bus for reliable business messaging, Event Grid for real-time reactive systems, and Event Hubs for massive data streams. You know the key practices: design idempotent handlers, include necessary context in events, and use dead-letter queues for failed messages.

And you understand when other patterns fit better - Request-Response for immediate answers, Event Sourcing for strict ordering.

But there’s a reality we haven’t fully addressed yet.

Your services are decoupled. Your order service publishes “OrderPlaced” and subscribers process it independently. Perfect.

Then a subscriber tries to process the event, and the database connection times out. Or the downstream API returns a 503. Or there’s a network hiccup. The event fails to process.

What happens next?

Do you lose the event? Do you retry immediately and hit the same error? Do you keep retrying until you exhaust your resources? How do you distinguish between temporary failures (retry them) and permanent failures (send to dead-letter queue)?

This is where the Retry pattern comes in.

In the next article, we’ll explore how to handle transient failures gracefully. You’ll learn when to retry, how long to wait between attempts, when to give up, and how to prevent retry storms from overwhelming your system.

The journey from monolith to microservices continues. Let’s keep building.