Introduction to Cloud-Native Architecture Patterns: Building Resilient Distributed Systems

Ahmed Muhi - 07 Oct, 2025

Introduction

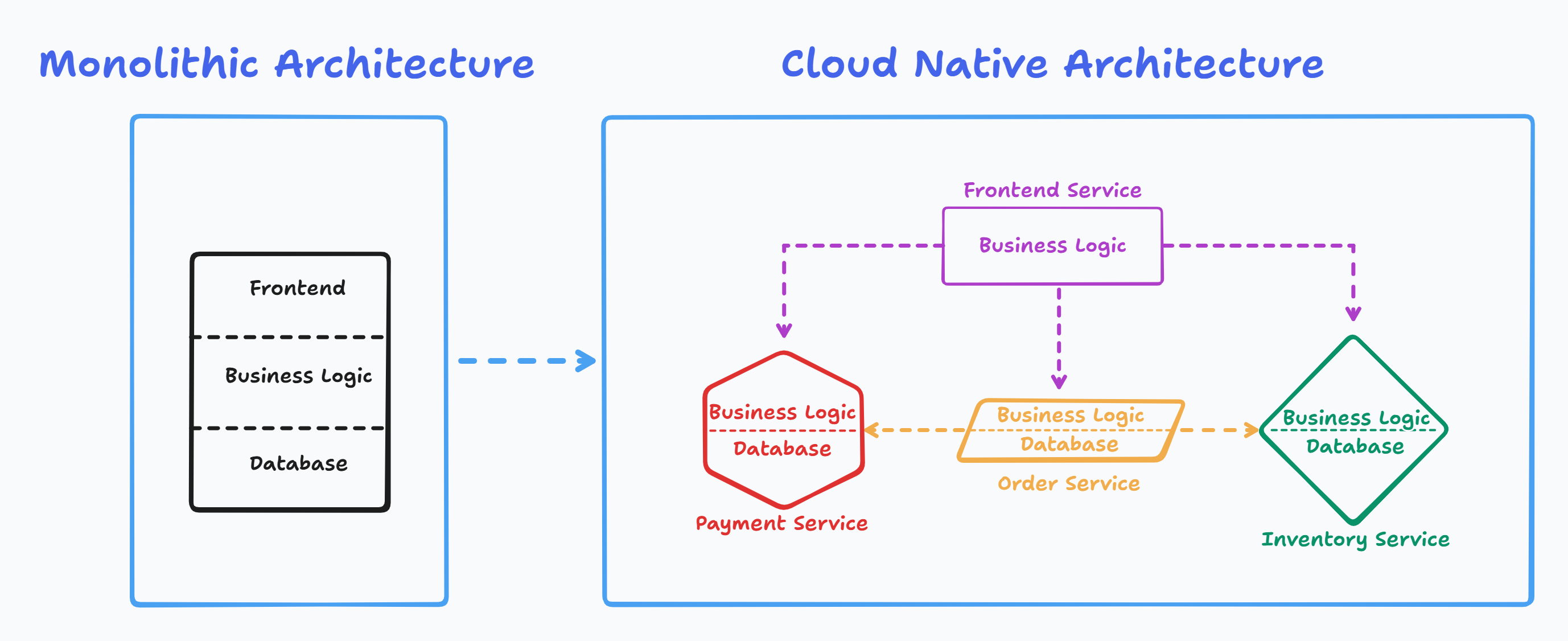

Building applications used to be simpler. You had one server running your application, one database, and when something broke, you knew exactly where to look. Everything was in one place.

Then your business grew. That single server couldn’t handle the load anymore. You needed to scale. You needed different teams to work independently. You needed to deploy updates without taking the entire system down.

So you did what everyone does: you broke your application apart into smaller, independent services.

And suddenly, everything got complicated.

Now when something breaks, it doesn’t just affect one part of your system - it ripples through everything:

- A slow database query in your payment service somehow causes your homepage to stop loading

- A spike in traffic to your search feature brings down your checkout flow

- A network hiccup between two services turns into a full outage

These aren’t bugs in your code. They’re not configuration mistakes. They’re the fundamental characteristics of distributed systems. When you split your application across multiple services, multiple machines, and multiple network calls, you’ve entered a different world with different rules.

This is what cloud-native architecture patterns are designed to solve.

These patterns help you build systems that expect failure and design for it from the start. They’re not about moving monoliths to the cloud - they’re about embracing distributed systems as your reality and building resilience into every decision.

Concretely, they help you:

- Handle failures gracefully

- Scale efficiently

- Keep services loosely coupled

They’re the collective wisdom of thousands of engineers who’ve faced these same challenges - at Netflix, Microsoft, Google, and countless other companies running systems at scale.

This series will teach you these patterns - not just what they are, but why they exist and when to use them. We’ll start with the problems, show you the pain of not solving them, and then introduce the patterns as solutions.

Let’s begin.

About This Series

This is Part 1 of a series where we tackle the real problems of distributed systems - one pattern at a time.

We’re not going to throw a catalog of patterns at you. Instead, we’ll start with the problems you’re actually facing: services that can’t talk to each other without breaking, transactions that span databases, failures that cascade through your entire system.

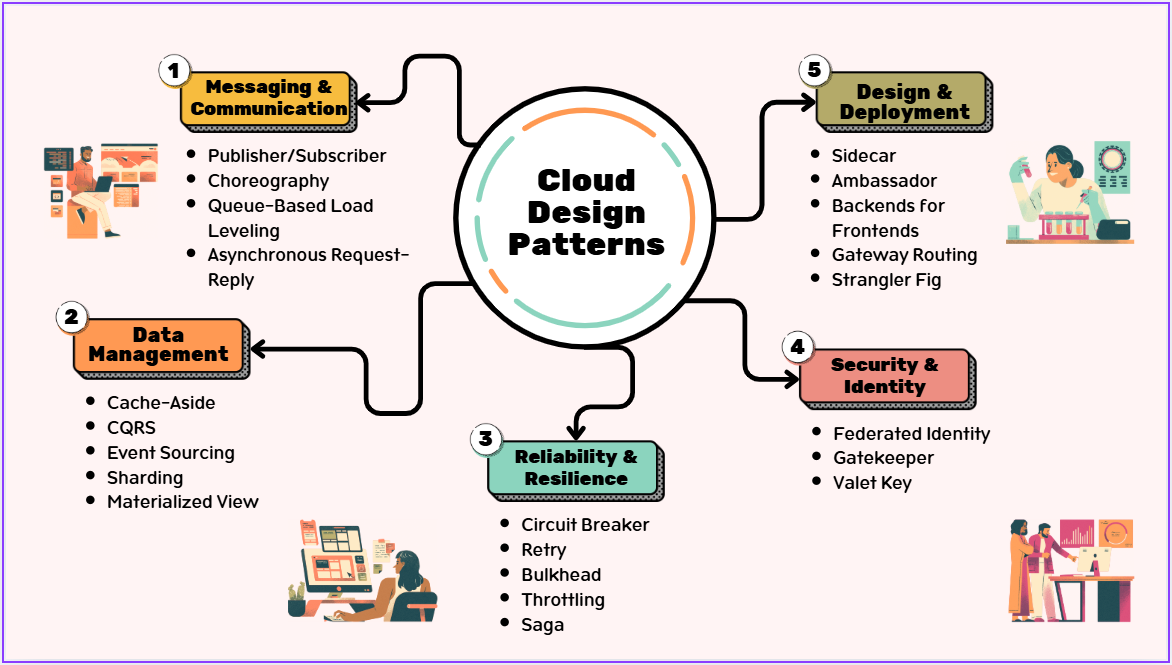

Then we’ll show you the patterns that solve them - Pub/Sub for decoupling, Saga for distributed transactions, Circuit Breaker for resilience, and more.

In this series:

- Introduction to Cloud-Native Patterns ← You are here

- Publisher-Subscriber Pattern (Pub/Sub)

- Saga Pattern (Distributed Transactions)

- Circuit Breaker Pattern

- Retry Pattern

- CQRS Pattern

- Event Sourcing Pattern

- And more as we go…

Each article is self-contained, but they build on each other. Start here, or jump to the pattern you need.

The Reality of Distributed Systems

When you split your application into distributed services, you gain real advantages. Different teams work independently. Services scale based on their individual needs. You deploy updates without taking the entire system down.

These benefits are why everyone builds this way.

But here’s what nobody tells you upfront: you’ve traded one set of problems for an entirely different set.

In a monolith, when you call a function, it either works or it doesn't. The outcome is immediate and deterministic. In distributed systems, every service call crosses a network. And networks are fundamentally unreliable - they drop packets, add latency, and fail in ways you can't predict. A call that times out may even have succeeded on the other side, and the caller can't tell which.

Here’s what this looks like in practice:

Your e-commerce site is humming along. Orders flowing. Everything green on the dashboard. Then the analytics service - the one that just tracks metrics, doesn’t touch any critical path - starts having database issues. It gets slow. Really slow.

Except your order service calls analytics synchronously on every order and waits for the response. So now every order waits 30 seconds for analytics to time out. Your checkout slows to a crawl. Customers see spinning wheels. Support tickets flood in.

The issue? Your checkout didn’t break. Analytics broke. But your entire ordering system went down anyway.
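To make the failure mode concrete, here is a minimal Python sketch. It assumes a hypothetical `record_order_metrics` analytics call that the order service makes synchronously, and it scales the 30-second wait down to 0.3 seconds so it runs quickly.

```python
import time

# Hypothetical: how long the struggling analytics service takes before the call gives up.
# Scaled down from the 30-second timeout in the story so the sketch runs quickly.
ANALYTICS_DELAY_S = 0.3


def record_order_metrics(order_id: str) -> None:
    """Hypothetical analytics client call; the sleep stands in for a slow database behind it."""
    time.sleep(ANALYTICS_DELAY_S)


def place_order(order_id: str) -> float:
    """Places an order and returns how long the customer waited, in seconds."""
    start = time.perf_counter()
    # Validating, charging, and saving the order are elided here and assumed to be fast.
    record_order_metrics(order_id)  # blocking call on the checkout path
    return time.perf_counter() - start


waited = place_order("order-1")
print(f"checkout took {waited:.2f}s, nearly all of it spent waiting on analytics")
```

Nothing in `place_order` is buggy. The customer's wait is the sum of every synchronous call on the path, so the slowest dependency sets the pace for checkout.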

This isn’t a bug you fix. This isn’t a configuration you tune. This is the fundamental reality of distributed systems. When services talk to each other over networks, one service’s problems become everyone’s problems.

Network failures aren't rare exceptions - they're constant background noise. Latency isn't something you optimize later - it's baked into every service call. And when something goes wrong across 10 services, figuring out which one failed and why is far harder than reading a single stack trace.

Cloud-native architecture patterns exist to help you design for this reality from the start.

What Are Cloud-Native Architecture Patterns?

Cloud-native architecture patterns are proven, reusable solutions to the common problems you encounter when building distributed systems. They’re not code libraries or frameworks - they’re design approaches that have been battle-tested by thousands of companies running systems at scale.

Think of them like design patterns in software development. The Factory pattern helps you create objects. The Observer pattern lets objects react when another object's state changes. These patterns don't prescribe specific code - they describe solution approaches you can implement in any language.

Cloud-native architecture patterns work the same way, but at a higher level. They address architectural challenges:

- How do you prevent a failing service from taking down your entire system?

- How do you handle transactions that span multiple services?

- How do you debug a request that touches 10 different services?

Each pattern provides a conceptual solution you can adapt to your specific technology stack.

Here’s the key: You don’t learn these patterns by memorizing a catalog. You learn them by recognizing problems. When your system experiences cascading failures, you reach for resilience patterns. When deployments become risky because services are tightly coupled, you reach for decoupling patterns. When you can’t debug distributed requests, you reach for observability patterns.

The patterns are tools. The skill is knowing when to reach for each tool.

That’s what this series will teach you - how to recognize problems and reach for the right solutions. Let’s look at what that means in practice.

What This Looks Like in Practice

Here are two challenges that show up in almost every distributed system - challenges you’ve probably already encountered.

Challenge: When one service affects everything else

Your product pages load instantly. Then the recommendation service - the one suggesting “customers also bought” items - starts struggling with a slow database query.

Every product page calls the recommendation service. Now every page load waits for recommendations to time out. Your entire site slows to a crawl.

Recommendations aren't essential to a product page. They brought down your site anyway.
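One simple way to keep a non-essential dependency like recommendations from stalling the whole page is to give the call a strict time budget and render without it when the budget runs out. The Python sketch below does this with `asyncio.wait_for`. The names `fetch_recommendations` and `render_product_page` and the 100 ms budget are hypothetical, and this timeout-with-fallback is only a basic illustration, not one of the fuller resilience patterns covered later in the series.

```python
import asyncio
from typing import List


async def fetch_recommendations(product_id: str, delay_s: float) -> List[str]:
    """Hypothetical recommendation-service call; delay_s simulates a slow database query."""
    await asyncio.sleep(delay_s)
    return [f"{product_id}-also-bought-1", f"{product_id}-also-bought-2"]


async def render_product_page(product_id: str, rec_delay_s: float, budget_s: float = 0.1) -> dict:
    """Renders a product page, giving recommendations at most budget_s seconds."""
    try:
        recs = await asyncio.wait_for(
            fetch_recommendations(product_id, rec_delay_s), timeout=budget_s
        )
    except asyncio.TimeoutError:
        # Degrade gracefully: the page still loads, just without "customers also bought".
        recs = []
    return {"product": product_id, "recommendations": recs}


healthy = asyncio.run(render_product_page("p-42", rec_delay_s=0.01))
struggling = asyncio.run(render_product_page("p-42", rec_delay_s=5.0))
print(healthy["recommendations"])     # both recommendations
print(struggling["recommendations"])  # [] after ~0.1 s instead of a 5 s wait
```

The trade-off is explicit: when recommendations are slow, customers get a page without them instead of a page that never finishes loading.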

Challenge: When adding features requires coordinating multiple teams

Your product team wants to add fraud detection to orders. Because the order service calls each downstream service directly, you need to modify the order service, coordinate deployments across multiple teams, and ensure nothing breaks when you change how orders flow.

One feature. Six teams. Multiple deployment windows.

This is the reality of distributed systems. Services affect each other. Changes ripple. Cloud-native patterns give you ways to isolate failures and decouple services so your system doesn’t fall apart when one piece struggles.

Let’s start solving these challenges.

What’s Next

The first challenge we’re tackling? Tight coupling.

When services call each other directly, adding one feature requires coordinating multiple teams. One service’s problem becomes everyone’s problem. This is the challenge that shows up first in almost every distributed system.

Pub/Sub solves this.

Instead of services calling each other directly, they communicate through a message broker. The order service publishes an “OrderPlaced” event and moves on. Other services - inventory, shipping, fraud detection - subscribe and process independently. Services stay decoupled. Teams deploy independently. Failures stay isolated.
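The shape of this can be sketched in a few lines of Python. `InMemoryBroker` is a hypothetical, in-process stand-in for a real message broker (no persistence, no delivery guarantees), and the subscriber functions are illustrative. The point is the structure: the order service publishes `OrderPlaced` and returns, each subscriber reads from its own queue, and adding fraud detection means adding a subscriber rather than changing `place_order`.

```python
from collections import defaultdict, deque
from typing import Any, Callable, Deque, Dict, List

Event = Dict[str, Any]


class InMemoryBroker:
    """Hypothetical in-process broker: one queue per subscriber, no persistence."""

    def __init__(self) -> None:
        self._queues: Dict[str, List[Deque[Event]]] = defaultdict(list)

    def subscribe(self, topic: str) -> Deque[Event]:
        # Each subscriber gets its own queue, so it can consume at its own pace.
        queue: Deque[Event] = deque()
        self._queues[topic].append(queue)
        return queue

    def publish(self, topic: str, event: Event) -> None:
        # Fan a copy of the event out to every subscriber and return immediately;
        # the publisher never waits for anyone to process it.
        for queue in self._queues[topic]:
            queue.append(dict(event))


def drain(queue: Deque[Event], handle: Callable[[Event], None]) -> int:
    """Processes every pending event in one subscriber's queue; returns the count."""
    handled = 0
    while queue:
        handle(queue.popleft())
        handled += 1
    return handled


def place_order(broker: InMemoryBroker, order_id: str, amount: float) -> None:
    # Saving the order is elided; the order service only announces what happened.
    broker.publish("OrderPlaced", {"order_id": order_id, "amount": amount})


broker = InMemoryBroker()
inventory_queue = broker.subscribe("OrderPlaced")
shipping_queue = broker.subscribe("OrderPlaced")
fraud_queue = broker.subscribe("OrderPlaced")  # added later; place_order is untouched

place_order(broker, "order-1", 25.0)
place_order(broker, "order-2", 9999.0)

reserved: List[str] = []
flagged: List[str] = []


def reserve_stock(event: Event) -> None:
    reserved.append(event["order_id"])


def check_fraud(event: Event) -> None:
    if event["amount"] > 1000:  # hypothetical fraud rule
        flagged.append(event["order_id"])


drain(inventory_queue, reserve_stock)
drain(fraud_queue, check_fraud)
# The shipping service is down and has not drained its queue; orders still went through.
print(reserved, flagged, len(shipping_queue))
```

Because the shipping subscriber never ran, its two events are still waiting in its queue, yet both orders were placed. A real broker adds what this toy omits, such as durable storage and delivery guarantees.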

Pub/Sub appears everywhere: event-driven architectures, microservices communication, real-time data pipelines, background job processing. Understanding this pattern well gives you a foundation for understanding many others.

That’s where we’re starting.

Continue the Series

Ready to dive in?

Part 2: Publisher-Subscriber Pattern →

In the next article, we tackle tight coupling head-on. You’ll see exactly why direct service-to-service calls create deployment nightmares, how Pub/Sub decouples services through asynchronous messaging, and the trade-offs you must understand before implementing it.

You’ll learn:

- What Pub/Sub is and how it works (with concrete examples)

- When to use Azure Service Bus vs Event Grid vs Event Hubs

- The operational challenges: message delivery guarantees, idempotency, eventual consistency

- When to use Pub/Sub and when to choose alternatives

Each article in this series follows the same approach: Problem first. Pattern second. Trade-offs always.