Introduction to Kubernetes: Your Journey Begins Here

Ahmed Muhi - 23 Aug, 2024

Introduction

Welcome back, everyone! 👋 I hope you’re as excited as I am to continue our cloud native journey together. So far, we’ve covered the essentials of containers—from Docker to CRI-O and containerd—building a rock-solid foundation. Now it’s time to take the next step: Kubernetes.

You might be thinking, “Isn’t Kubernetes that complex container orchestration system everyone keeps talking about?” It certainly has a reputation, but if we approach it one step at a time—just like we did with containers—we’ll see it’s more approachable than you might expect.

In this article, we’ll:

- Get a clear view of what Kubernetes is

- Explore why it’s vital in the cloud native landscape

- See how it builds on everything you’ve learned about containers so far

By the end, you’ll have a solid grasp of Kubernetes’ core purpose and why it’s become the standard for managing containerized applications at scale. Ready to begin this new chapter in our cloud native story? Let’s dive in and discover what makes Kubernetes such a game-changer!

What Is Kubernetes?

Alright, let’s tackle the big question: What exactly is Kubernetes? Kubernetes, often abbreviated as K8s (the “8” stands for the eight letters between the “K” and the “s”, and it’s commonly pronounced “kates”), is an open-source container orchestration platform. Originally developed by Google and open-sourced in 2014, it’s now maintained by the Cloud Native Computing Foundation (CNCF). Over the years, it has become the de facto standard for deploying, scaling, and managing containerized applications.

From One Container to Many

You might be wondering, “Why do we need Kubernetes if we already have containers?” Great question! While containers solve the problem of packaging and running applications consistently, Kubernetes solves the problems that arise when you’re running many containers across multiple machines.

Let me give you a real-world scenario. Imagine you’re running a popular e-commerce website. During normal times, you might need 10 containers running your application. But what happens during a big sale when traffic spikes? You need to quickly scale up to 50 containers to handle the load. Then, after the sale, you need to scale back down to save resources. Doing this manually would be a nightmare! This is where Kubernetes shines—it can automatically scale your application up or down based on demand.

But that’s not all. Kubernetes also helps with things like:

- Distributing network traffic across instances so your application stays available

- Rolling out new versions of your application without downtime

- Automatically restarting containers that fail

- Storing and managing sensitive information, like passwords and API keys

As we continue our Kubernetes journey, you’ll see how these concepts come together to help you create powerful, scalable, and resilient applications. But for now, the key thing to remember is this: Kubernetes makes managing containerized applications at scale not just possible, but actually pretty straightforward once you get the hang of it.

Key Benefits of Kubernetes

Now that we’ve seen the big picture of Kubernetes, let’s explore why so many organizations rely on it to power their applications. Here are some key benefits you’ll notice right away:

1. Scalability and High Availability

Kubernetes is built for situations where your traffic can suddenly spike from hundreds to thousands of users. With autoscaling configured, it adjusts the number of running instances based on CPU usage, memory consumption, or custom metrics you define: it adds instances when load rises and removes them when load falls. Plus, by spreading your application across multiple machines, Kubernetes keeps your service available even if some servers go down.
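If you’re curious how the autoscaler decides how many instances to run, the Horizontal Pod Autoscaler documentation describes its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made when the ratio is within a tolerance of 1.0 (0.1 by default). Here’s a minimal Python sketch of just that arithmetic. The function name, the requests-per-second numbers, and the min/max bounds are illustrative assumptions, and the real autoscaler layers extra behavior (such as stabilization windows) on top.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1,
                     min_replicas: int = 1, max_replicas: int = 100) -> int:
    """Toy version of the HPA scaling rule (hypothetical helper, not a Kubernetes API)."""
    ratio = current_metric / target_metric
    # Within the tolerance band, the replica count is left alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Multiply before dividing so whole-number inputs stay exact where possible.
    wanted = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds (the bounds here are assumptions).
    return max(min_replicas, min(max_replicas, wanted))


# Sale day: 10 Pods each see 500 requests/s, but we target 100 requests/s per Pod.
print(desired_replicas(10, 500, 100))  # 50
# After the sale: 50 Pods each see only 20 requests/s.
print(desired_replicas(50, 20, 100))   # 10
# Load is just 4% above target, so nothing changes.
print(desired_replicas(10, 104, 100))  # 10
```

Notice how this reproduces our e-commerce scenario: the same rule scales from 10 to 50 instances during the sale and back down afterwards.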

2. Self-Healing

This is where Kubernetes acts as your system guardian. When a container in a Pod (a group of one or more containers) crashes, or a server (called a node) fails, Kubernetes detects the issue and takes action. It restarts failed containers and replaces the Pods that were running on a failed node by creating new ones on healthy nodes, so your application keeps running smoothly. Because it continuously monitors the health of your components, Kubernetes can often deal with failures before your users notice.

Note: We’ll explore Pods, nodes, and other Kubernetes concepts in detail in the upcoming sections.
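To make the node-failure case a little more concrete, here’s a toy Python sketch of the outcome. One caveat: real Kubernetes doesn’t move Pods. The owning controller (for example a ReplicaSet) creates brand-new Pods, and the scheduler places them; with default settings, noticing a dead node and evicting its Pods can take a few minutes. The Pod and node names, the naming scheme for replacements, and the “least-loaded node wins” placement are all assumptions made for illustration.

```python
def heal_after_node_failure(pods: dict[str, str], nodes: list[str],
                            failed_node: str, desired: int) -> dict[str, str]:
    """Return a new pod -> node map after replacing Pods lost on failed_node.

    Toy model (hypothetical helper): Pods on the failed node disappear, and
    replacements are placed on the least-loaded healthy node.
    """
    healthy = [n for n in nodes if n != failed_node]
    if not healthy:
        raise RuntimeError("no healthy nodes left to run replacement Pods")
    # Pods on the failed node are gone; the rest stay where they are.
    survivors = {pod: node for pod, node in pods.items() if node != failed_node}
    load = {node: 0 for node in healthy}
    for node in survivors.values():
        load[node] += 1
    result = dict(survivors)
    counter = 0
    while len(result) < desired:
        # Pick the healthy node with the fewest Pods (ties broken by name).
        target = min(healthy, key=lambda n: (load[n], n))
        result[f"web-replacement-{counter}"] = target  # hypothetical naming scheme
        load[target] += 1
        counter += 1
    return result


pods = {"web-1": "node-a", "web-2": "node-b", "web-3": "node-b", "web-4": "node-c"}
healed = heal_after_node_failure(pods, ["node-a", "node-b", "node-c"], "node-b", desired=4)
print(healed)  # web-1 and web-replacement-0 on node-a; web-4 and web-replacement-1 on node-c
```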

3. Automated Rollouts and Rollbacks

Deploying new versions of your application can be tricky, but Kubernetes simplifies the process. You can roll out new versions gradually across your servers and watch how they perform in real time. If the new version fails its health checks, the rollout stalls instead of replacing all of your healthy instances, and you can roll back to the previous version with a single command. This means you can deploy more frequently with confidence while minimizing risk.
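Rollbacks are cheap because a Deployment keeps a history of its previous revisions (by default it retains up to 10 old ReplicaSets), and `kubectl rollout undo` switches back to the previous one. The Python class below is a hypothetical, heavily simplified model of that history, where each revision is reduced to just an image tag. It’s a sketch of the idea, not how the Deployment controller is implemented.

```python
class ToyDeployment:
    """Hypothetical model of a Deployment's rollout history (not a real API)."""

    def __init__(self, image: str):
        # Each revision records the Pod template; here that's just the image tag.
        self.revisions: list[str] = [image]

    @property
    def current(self) -> str:
        return self.revisions[-1]

    def rollout(self, image: str) -> None:
        """Start a new revision with a different image."""
        self.revisions.append(image)

    def undo(self) -> str:
        """Go back to the previous revision, recording it as the newest one."""
        if len(self.revisions) < 2:
            raise RuntimeError("no previous revision to roll back to")
        previous = self.revisions[-2]
        self.revisions.append(previous)
        return previous


app = ToyDeployment("myawesomeapp:v1")
app.rollout("myawesomeapp:v2")  # v2 turns out to be broken...
app.undo()                      # ...so we go back
print(app.current)              # myawesomeapp:v1
```

Recording the rollback as a new revision (rather than deleting v2) roughly mirrors how Kubernetes behaves: running undo twice flips you back to v2.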

4. Service Discovery and Load Balancing

In modern applications, different parts of your software (called services) handle specific functions, such as one service for user authentication and another for processing orders. These services need to find and talk to each other reliably, and Kubernetes helps here too. It gives each service a stable name and address that other services can use to discover it, then spreads incoming requests across all available instances of that service. So even if you run multiple copies of the same service, the workload is shared among them.
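Here’s a rough Python sketch of that idea: callers always use the same stable name, while the list of Pod IPs behind it can change. The class, the service name, and the IP addresses are hypothetical, and round-robin is a simplification: kube-proxy in its iptables mode picks a backend at random, while its IPVS mode defaults to round-robin.

```python
class ToyService:
    """Hypothetical stand-in for a Service: a stable name in front of changing Pods."""

    def __init__(self, name: str, endpoints: list[str]):
        self.name = name                  # what clients use; never changes
        self.endpoints = list(endpoints)  # Pod IPs, which come and go
        self._next = 0

    def update_endpoints(self, endpoints: list[str]) -> None:
        """Called when Pods are added or removed; clients keep using self.name."""
        self.endpoints = list(endpoints)
        self._next = 0

    def route(self) -> str:
        """Pick the next Pod IP in round-robin order."""
        if not self.endpoints:
            raise RuntimeError(f"service {self.name!r} has no ready endpoints")
        endpoint = self.endpoints[self._next % len(self.endpoints)]
        self._next += 1
        return endpoint


orders = ToyService("orders", ["10.244.1.5", "10.244.2.7", "10.244.3.9"])
print([orders.route() for _ in range(4)])
# ['10.244.1.5', '10.244.2.7', '10.244.3.9', '10.244.1.5']
orders.update_endpoints(["10.244.2.7", "10.244.3.9"])  # one Pod went away
print(orders.route())  # 10.244.2.7
```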

5. And There’s More…

Kubernetes doesn’t stop there. It manages sensitive information like passwords and API keys, manages storage for your applications, and lets you describe your entire system in simple configuration files. But don’t worry about all that just yet; we’ll explore these powerful features in upcoming articles as we build on these fundamentals.

Now that you’ve seen what Kubernetes can do, let’s peek under the hood to understand how it accomplishes all of this. In the next section, we’ll explore the key components that make up a Kubernetes system.

High-Level Architecture of Kubernetes

Alright, let’s see how Kubernetes is put together. Don’t worry if this seems a bit complex at first; for now we’re just getting a bird’s-eye view, and we’ll dive deeper into each component in future articles.

At its core, Kubernetes is designed around a client-server architecture. When you’re running Kubernetes, you’re running what’s called a Kubernetes cluster. Let’s break this down into two main parts: the control plane and the nodes.

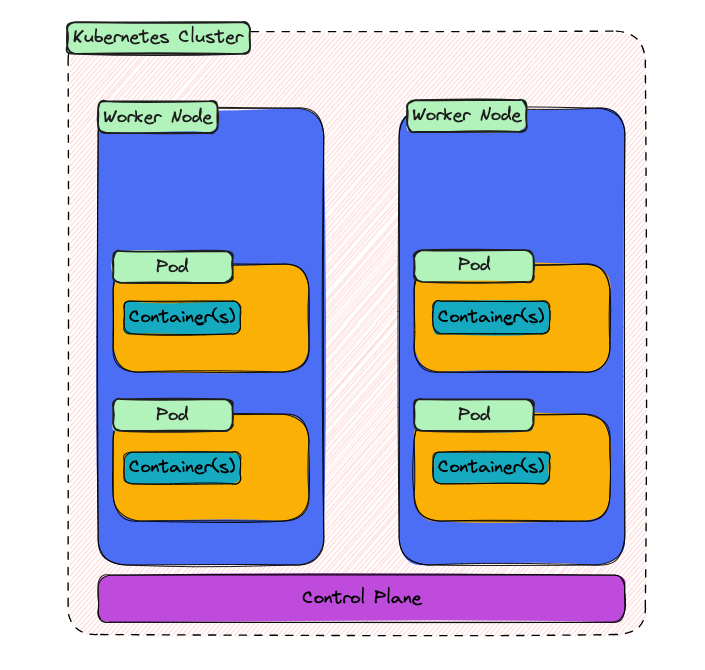

The image above shows the high-level architecture of a Kubernetes cluster.

1. The Control Plane

Think of the control plane as the brain of Kubernetes. It’s responsible for making global decisions about the cluster, as well as detecting and responding to cluster events. Here are the key components:

- API Server: This is the front door to the Kubernetes control plane. All communication, both internal and external, goes through the API server.

- etcd: This is a consistent, distributed key-value store that holds all cluster data, including the cluster configuration and the state of every object.

- Scheduler: This watches for newly created Pods (groups of containers) that don’t have a node yet and assigns each one to a suitable node.

- Controller Manager: This runs controller processes, which continuously compare the cluster’s actual state with the desired state and act to close the gap, such as ensuring the right number of Pods are running.
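Controllers all follow the same basic loop: observe the current state, compare it with the desired state, and act on the difference. Below is a minimal Python sketch of a single pass of that loop for “keep N Pods running”. The function and Pod names are hypothetical; a real controller reads state from the API server and repeats this continuously.

```python
def reconcile(desired_replicas: int, running_pods: set[str]) -> tuple[int, list[str]]:
    """One pass of a toy controller loop (hypothetical helper, not a Kubernetes API).

    Compares desired state with observed state and returns the actions that
    would close the gap: how many Pods to create and which Pods to delete.
    """
    missing = desired_replicas - len(running_pods)
    if missing > 0:
        return missing, []
    # Too many (or exactly enough) Pods: delete the surplus. Real controllers
    # prefer removing pending or not-ready Pods first; here we just pick by name.
    surplus = -missing
    return 0, sorted(running_pods)[:surplus]


# Desired: 3 replicas. Observed: one Pod has disappeared.
print(reconcile(3, {"web-1", "web-2"}))           # (1, [])
# Desired lowered to 2 while 3 are running.
print(reconcile(2, {"web-1", "web-2", "web-3"}))  # (0, ['web-1'])
```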

2. The Nodes

Nodes are the workers of a Kubernetes cluster. They’re the machines (physical or virtual) that run your applications. Each node includes:

- Kubelet: This is the primary node agent. It watches for pods that have been assigned to its node and ensures they’re running.

- Container Runtime: This is the software responsible for running containers (such as containerd or CRI-O; remember those?).

- Kube-proxy: This maintains network rules on each node so that requests can reach the right Pods, even as Pods come and go.

Now, you might be wondering, “How do I interact with all of this?” Well, that’s where kubectl comes in. It’s a command-line tool that lets you control Kubernetes clusters. Think of it as your direct line to communicating with the API server.

Here’s a simplified view of how it all fits together:

- You use kubectl to send commands to the API server.

- The API server validates your request and stores the desired state in etcd.

- The scheduler decides where to run your application.

- The kubelet on the chosen node sees the new assignment and asks the container runtime to start your application’s containers.

- Your application runs on the node, and Kubernetes keeps it running according to your specifications.
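If it helps to see that flow as code, here’s a toy, in-memory Python walk-through. Everything in it is hypothetical: a dict stands in for etcd, `api_create_pod` plays the API server, `schedule` is a simplified scheduler (filter out nodes without enough CPU, then prefer the one with the most free CPU), and `kubelet_sync` plays the kubelets. The real components are far richer, but the division of labor is the same.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    cpu_capacity_m: int       # allocatable CPU in millicores
    cpu_requested_m: int = 0  # CPU already requested by scheduled Pods

    @property
    def free_m(self) -> int:
        return self.cpu_capacity_m - self.cpu_requested_m


class ToyCluster:
    """Hypothetical walk-through of kubectl -> API server -> scheduler -> kubelet."""

    def __init__(self, nodes: list[Node]):
        self.store: dict[str, dict] = {}         # stands in for etcd
        self.nodes = {n.name: n for n in nodes}
        self.running: dict[str, list[str]] = {}  # what each node's kubelet runs

    def api_create_pod(self, name: str, image: str, cpu_m: int) -> None:
        """API server: validate the request, then persist it."""
        if not name or not image or cpu_m <= 0:
            raise ValueError("invalid Pod spec")
        if name in self.store:
            raise ValueError(f"Pod {name!r} already exists")
        self.store[name] = {"image": image, "cpu_m": cpu_m, "node": None}

    def schedule(self) -> None:
        """Scheduler: keep nodes that fit, then prefer the one with most free CPU."""
        for name, pod in sorted(self.store.items()):
            if pod["node"] is not None:
                continue
            fits = [n for n in self.nodes.values() if n.free_m >= pod["cpu_m"]]
            if not fits:
                continue  # stays Pending until capacity frees up
            best = min(fits, key=lambda n: (-n.free_m, n.name))
            best.cpu_requested_m += pod["cpu_m"]
            pod["node"] = best.name

    def kubelet_sync(self) -> None:
        """Kubelets: start any Pod bound to their node that isn't running yet."""
        for name, pod in self.store.items():
            node = pod["node"]
            if node is not None and name not in self.running.get(node, []):
                self.running.setdefault(node, []).append(name)


cluster = ToyCluster([Node("node-a", 1000), Node("node-b", 2000)])
cluster.api_create_pod("web", "myawesomeapp:v1", cpu_m=500)  # roughly what kubectl triggers
cluster.api_create_pod("big", "batch:v1", cpu_m=3000)        # too large for any node
cluster.schedule()
cluster.kubelet_sync()
print(cluster.running)               # {'node-b': ['web']}
print(cluster.store["big"]["node"])  # None, i.e. still Pending
```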

I know this might seem like a lot to take in, but don’t worry! As we progress through our Kubernetes journey, we’ll explore each of these components in more detail. For now, the key thing to understand is that Kubernetes has a distributed architecture designed for scalability and resilience.

Remember, every expert was once a beginner. When I first looked at the Kubernetes architecture, it seemed overwhelming. But as we break it down piece by piece in the coming articles, I promise it will start to make more and more sense.

In the next section, we’ll take a look at some basic Kubernetes objects: the building blocks you’ll use to describe your applications in Kubernetes. Ready to start putting the pieces together? Let’s keep going!

Basic Kubernetes Objects

Now that we have a high-level view of Kubernetes architecture, let’s talk about some of the basic building blocks you’ll be working with in Kubernetes. These are called Kubernetes objects, and they’re the core of how you’ll define your application in Kubernetes.

Remember, we’re just getting an overview here. We’ll dive deeper into each of these in future articles, so don’t worry if you don’t grasp everything right away. The goal is to get familiar with the names and basic concepts.

1. Pods

Let’s start with the smallest deployable unit in Kubernetes: the Pod. A Pod is like a wrapper around one or more containers. If you’re thinking, “Wait, isn’t Kubernetes all about managing containers?”, you’re on the right track! Pods add an extra layer of organization: containers in the same Pod share a network identity (they can reach each other on localhost) and can share storage volumes.

Pods are the basic building blocks in Kubernetes. When you deploy an application, you’re actually deploying a Pod (or usually, multiple Pods).

2. ReplicaSets

Next up, we have ReplicaSets. These ensure that a specified number of Pod replicas are running at any given time. If a Pod fails, the ReplicaSet creates a new one to maintain the desired number.

Think of a ReplicaSet as a supervisor making sure you always have the right number of workers (Pods) on the job.

3. Deployments

Deployments are a higher-level concept that manages ReplicaSets and provides declarative updates to Pods. When you want to deploy a new version of your application, you’ll typically work with Deployments.

Deployments allow you to describe a desired state (like “I want three replicas of my web server running”), and Kubernetes will work to maintain that state.

4. Services

Services are all about providing a consistent way to access your Pods. Remember, Pods can come and go (like when scaling up or down), so their IP addresses aren’t reliable. Services provide a stable endpoint to connect to your Pods.

There are different types of Services, like ClusterIP (the default; reachable only from inside the cluster), NodePort (exposes the Service on a fixed port on every node), and LoadBalancer (provisions an external load balancer from your cloud provider).

5. Namespaces

Finally, let’s talk about Namespaces. These are ways to divide cluster resources between multiple users or projects. Think of them as virtual clusters within your Kubernetes cluster.

Namespaces are great for organizing different environments (like development, staging, and production) or different applications within the same cluster.
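One practical effect of namespaces shows up in DNS: cluster DNS gives each Service a name of the form `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain is commonly `cluster.local` (it’s configurable). That’s why the same Service name can live in several namespaces without clashing. The helper below is a hypothetical sketch that just builds those names; Pods in the same namespace can usually use the short name on its own.

```python
def service_dns_name(service: str, namespace: str,
                     cluster_domain: str = "cluster.local") -> str:
    """Build a Service's fully qualified DNS name (hypothetical helper)."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# The same Service name in three environments, each in its own namespace.
for env in ("development", "staging", "production"):
    print(service_dns_name("orders", env))
# orders.development.svc.cluster.local
# orders.staging.svc.cluster.local
# orders.production.svc.cluster.local
```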

Now, you might be thinking, “Wow, that’s a lot of new terms!” And you’re right, it is. But here’s the thing: each of these objects solves a specific problem in managing containerized applications at scale. As we go deeper in future articles, you’ll see how they all work together to create powerful, flexible application deployments.

Remember, you don’t need to memorize all of this right now. The important thing is to start getting familiar with these terms. As we progress, we’ll explore each of these objects in more detail, and you’ll have plenty of opportunities to see them in action.

In our next section, we’ll walk through a simple example to see how some of these objects might work together in a real-world scenario. Ready to see Kubernetes in action? Let’s keep going!

Kubernetes in Action: A Simple Example

Now that we’ve covered the basic components and objects in Kubernetes, let’s walk through a simple example to see how these pieces fit together. Don’t worry, we won’t be diving into actual code or commands just yet – this is more of a conceptual walkthrough to help you visualize how Kubernetes works.

Let’s imagine we’re deploying a simple web application. We’ll call it “MyAwesomeApp”. Here’s how we might set it up in Kubernetes:

1. Creating a Deployment

First, we’d create a Deployment for our application. In this Deployment, we’d specify things like:

- The container image to use (let’s say it’s myawesomeapp:v1)

- The number of replicas we want (let’s say 3)

- Any environment variables or configuration our app needs

When we create this Deployment, Kubernetes springs into action:

- The Deployment creates a ReplicaSet

- The ReplicaSet ensures that 3 Pods are created, each running our MyAwesomeApp container
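If you’d like a peek at what such a Deployment roughly looks like, here’s a sketch. Manifests are usually written in YAML, but the API accepts the same structure as JSON (for example via `kubectl apply -f deployment.json`), so this builds it as a Python dict and prints it. The container port 8080 and the `LOG_LEVEL` variable are assumptions standing in for “any environment variables or configuration our app needs”.

```python
import json

APP = "myawesomeapp"  # the label ties the Deployment, its ReplicaSet, and its Pods together

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": APP},
    "spec": {
        "replicas": 3,
        # The ReplicaSet counts Pods matching these labels.
        "selector": {"matchLabels": {"app": APP}},
        "template": {  # the Pod template every replica is created from
            "metadata": {"labels": {"app": APP}},
            "spec": {
                "containers": [
                    {
                        "name": APP,
                        "image": f"{APP}:v1",
                        "ports": [{"containerPort": 8080}],               # assumed port
                        "env": [{"name": "LOG_LEVEL", "value": "info"}],  # hypothetical variable
                    }
                ]
            },
        },
    },
}

print(json.dumps(deployment, indent=2))
```

Note that the selector must match the Pod template’s labels; that link is how the ReplicaSet knows which Pods belong to it.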

2. Setting up a Service

Next, we’d create a Service to make our application accessible. Let’s say we create a LoadBalancer service:

- This service gets an external IP address

- It routes traffic to our Pods
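A matching LoadBalancer Service could look roughly like this sketch, again built as a Python dict and printed as JSON. It assumes the Pods carry the label `app: myawesomeapp` and listen on port 8080 (both assumptions); the external IP isn’t something you write here, since the cloud provider assigns it and it appears in the Service’s status once provisioned.

```python
import json

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "myawesomeapp"},
    "spec": {
        "type": "LoadBalancer",               # ask the cloud provider for an external load balancer
        "selector": {"app": "myawesomeapp"},  # route to Pods carrying this label
        "ports": [
            # Port 80 on the load balancer -> port 8080 in each Pod (assumed).
            {"protocol": "TCP", "port": 80, "targetPort": 8080}
        ],
    },
}

print(json.dumps(service, indent=2))
```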

3. Scaling the Application

Now, let’s say our application becomes really popular and we need to scale up. We could update our Deployment to specify 5 replicas instead of 3. Kubernetes would:

- Update the ReplicaSet’s desired count from 3 to 5

- Create two new Pods

- Start routing Service traffic to the new Pods as soon as they’re ready
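Why does the Service pick up the new Pods without being told? It doesn’t track Pods by name; it selects every ready Pod whose labels match its selector. Here’s a toy Python version of that equality-based matching. The helper and Pod names are hypothetical (real Pod names get random suffixes), and readiness is ignored for simplicity.

```python
def select_pods(pods: dict[str, dict[str, str]], selector: dict[str, str]) -> list[str]:
    """Return names of Pods whose labels contain every key/value in selector."""
    return sorted(
        name for name, labels in pods.items()
        if all(labels.get(key) == value for key, value in selector.items())
    )


pods = {
    "myawesomeapp-1": {"app": "myawesomeapp"},
    "myawesomeapp-2": {"app": "myawesomeapp"},
    "myawesomeapp-3": {"app": "myawesomeapp"},
    "myawesomeapp-4": {"app": "myawesomeapp"},    # new after scaling to 5
    "myawesomeapp-5": {"app": "myawesomeapp"},    # new after scaling to 5
    "metrics-agent-1": {"app": "metrics-agent"},  # unrelated Pod, not selected
}
print(select_pods(pods, {"app": "myawesomeapp"}))
```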

4. Updating the Application

Time for a new version! We update our Deployment to use myawesomeapp:v2. Kubernetes then:

- Creates a new ReplicaSet with the new version

- Gradually scales down the old ReplicaSet and scales up the new one

- Completes a rolling update that, with health checks in place, avoids downtime
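How gradual is “gradually”? A Deployment’s rolling update is governed by `maxSurge` (how many extra Pods may exist above the desired count) and `maxUnavailable` (how many may be missing), both 25% by default, with surge rounded up and unavailability rounded down. The Python below is a toy simulation of the old/new Pod counts for our 5 replicas. It assumes new Pods become ready instantly, which real rollouts never do, so treat the exact steps as illustrative.

```python
import math


def rollout_steps(replicas: int, max_surge: int, max_unavailable: int) -> list[tuple[int, int]]:
    """Simulate (old_pods, new_pods) counts during a toy rolling update.

    Assumes new Pods are ready immediately; real rollouts wait for readiness.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up the new ReplicaSet as far as the surge budget allows.
        add = min(replicas + max_surge - (old + new), replicas - new)
        if add > 0:
            new += add
            steps.append((old, new))
        # Scale down the old ReplicaSet while keeping enough Pods available.
        remove = min((old + new) - (replicas - max_unavailable), old)
        if remove > 0:
            old -= remove
            steps.append((old, new))
    return steps


replicas = 5
surge = math.ceil(replicas * 0.25)         # 2
unavailable = math.floor(replicas * 0.25)  # 1
print(rollout_steps(replicas, surge, unavailable))
# [(5, 0), (5, 2), (2, 2), (2, 5), (0, 5)]
```

At every step at least 4 Pods are running and never more than 7, which is what lets users keep being served while v1 is swapped for v2.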

5. Self-healing in Action

Oops! There’s a bug in v2 causing one of the Pods to crash. Kubernetes:

- Notices that the Pod’s container has crashed

- Automatically restarts the container (and if a Pod is lost entirely, the ReplicaSet creates a replacement)

- Keeps the Service routing traffic to the healthy Pods
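If a container keeps crashing, the kubelet doesn’t restart it in a tight loop. The Pod lifecycle documentation describes an exponential back-off starting at 10 seconds, doubling each time, and capped at five minutes (you’ll see the Pod reported as `CrashLoopBackOff`). Here’s a toy Python sketch of those delays; the defaults may differ across Kubernetes versions. It also shows why self-healing only buys time: a real bug in v2 still needs a fix or a rollback.

```python
def restart_delays(crashes: int, initial_s: int = 10, cap_s: int = 300) -> list[int]:
    """Delays (seconds) before each restart of a repeatedly crashing container.

    Toy model of the kubelet's documented back-off: start at 10 s, double each
    time, never exceed 5 minutes. (The kubelet also resets the back-off after
    a container runs cleanly for 10 minutes; that part is not modelled here.)
    """
    delays, delay = [], initial_s
    for _ in range(crashes):
        delays.append(delay)
        delay = min(delay * 2, cap_s)
    return delays


print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```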

Throughout all of this, Kubernetes is constantly working to maintain the desired state we’ve specified. It’s scaling, updating, and healing our application without us having to manually intervene.

Now, I know we’ve skipped over a lot of details here. In a real-world scenario, you’d be using kubectl or a Kubernetes dashboard to create these objects, monitor your application, and make changes. But I hope this example gives you a sense of how the different Kubernetes objects we’ve discussed work together to deploy and manage an application.

The power of Kubernetes really shines in scenarios like this. It’s handling all the complex orchestration behind the scenes, allowing us to focus on our application rather than the intricacies of how it’s deployed and managed.

In future articles, we’ll dive deeper into each of these steps, looking at the actual YAML definitions and kubectl commands you’d use. But for now, I hope this conceptual walkthrough helps you see how Kubernetes can make managing containerized applications easier and more efficient.

In our next and final section, we’ll look ahead to what’s coming up in our Kubernetes learning journey. Let’s keep going!

What’s Next in Our Kubernetes Journey?

Congratulations! You’ve just taken your first big step into the world of Kubernetes. How does it feel? Exciting, right? We’ve covered a lot of ground today, from understanding what Kubernetes is, to exploring its architecture, and even walking through a simple example.

But guess what? This is just the beginning of our Kubernetes adventure!

In the upcoming articles, we’re going to dive deeper into each of the concepts we’ve touched on today. Here’s a sneak peek of what you can look forward to:

- Kubernetes Objects in Detail: We’ll explore Pods, Deployments, Services, and more, looking at how to define them and what they do.

- Hands-on with kubectl: You’ll learn how to interact with a Kubernetes cluster using the command-line tool kubectl.

- Setting Up Your First Cluster: We’ll walk through setting up a local Kubernetes cluster using tools like Minikube or kind.

- Deploying Your First Application: You’ll get to deploy a real application to Kubernetes, seeing firsthand how all the pieces fit together.

- Kubernetes Networking: We’ll demystify how networking works in Kubernetes, including concepts like CNI plugins and Ingress.

- Storage in Kubernetes: Learn how Kubernetes handles persistent storage for your applications.

- Kubernetes Security: Understand how to keep your Kubernetes clusters and applications secure.

- Advanced Topics: We’ll touch on more advanced concepts like Operators, Helm, and GitOps.

Remember, learning Kubernetes is a journey, not a destination. It’s okay if some concepts don’t click right away; that’s normal! The key is to stay curious, keep practicing, and don’t be afraid to experiment.

You’ve taken a big step today in your cloud native journey. Be proud of what you’ve learned! Kubernetes might seem complex now, but I promise, with each article and each bit of practice, it’ll become clearer and more familiar.

Thank you for joining me on this introduction to Kubernetes. I can’t wait to dive deeper with you in our upcoming articles. Until then, happy learning, and may your pods always be healthy, and your clusters always be resilient! 😊