Act 1 – Introduction

You’ve come a long way.

You’ve built Docker images, launched containers, wired up networks, and even mounted persistent volumes to store data. You’ve seen your app come alive — running on your machine, in the cloud, connected and stateful.

But here’s the plot twist:

Docker doesn’t actually run your containers.

Wait, what?

That terminal command you’ve been using — docker run — does a lot, but it’s not the thing doing the low-level work of launching your application. It’s more like the conductor of an orchestra. The one doing the real heavy lifting?

That’s something called a container runtime.

And in this article, you’re going to learn what a container runtime is, why it matters, and what’s actually happening behind the scenes when your container starts.

This is a pivotal moment in your container journey — where we lift the hood and see how Docker (and Kubernetes too) hands off work to a different layer of software entirely.

By the end of this guide, you’ll understand:

- What a container runtime is

- The difference between Docker, containerd, and runc

- Why Kubernetes uses runtimes like containerd and CRI-O

- How this knowledge helps you debug, optimize, and architect containerized systems like a pro

Let’s start with the big question:

If Docker doesn’t run containers… who does?

Act 2 – What Is a Container Runtime?

Let’s zoom in on what really happens when a container starts.

Behind the scenes, there’s a lower layer — one that most developers never touch directly — quietly doing the work.

It’s called the container runtime.

Its job is simple, but critical:

Take your container image, prepare it, and run it as a working, isolated process on your machine.

That means:

- Getting the image onto your system

- Unpacking it into a usable filesystem

- Creating the container process

- Giving it access to the resources it needs — like CPU, memory, and storage
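The first two steps on that list, getting the image and unpacking it, can be sketched in a few lines of Python. This is a toy, in-memory model built on stated assumptions. It borrows the OCI idea that every layer is addressed by a `sha256:<hex>` digest of its bytes. It encodes each layer as a JSON map of file paths instead of a real tar archive. It also uses `None` in place of an OCI whiteout entry, the marker an upper layer uses to delete a file from a lower one. The function names and sample data are hypothetical. Real runtimes such as containerd use tar streams, snapshotters, and a persistent content store.

```python
import hashlib
import json
from typing import Dict, List, Optional

# A toy layer: file path -> file contents. None stands in for an OCI
# "whiteout" entry, which marks a file from a lower layer as deleted.
Layer = Dict[str, Optional[str]]


def digest_of(blob: bytes) -> str:
    """Compute an OCI-style content digest ("sha256:<hex>") for a blob."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def pull_layer(registry: Dict[str, bytes], digest: str) -> Layer:
    """Fetch a blob by digest and refuse it if the content does not match."""
    blob = registry[digest]
    if digest_of(blob) != digest:
        raise ValueError(f"digest mismatch for {digest}")
    return json.loads(blob)


def apply_layers(layers: List[Layer]) -> Dict[str, str]:
    """Stack layers bottom to top into a single root filesystem view."""
    rootfs: Dict[str, str] = {}
    for layer in layers:
        for path, content in layer.items():
            if content is None:
                rootfs.pop(path, None)  # whiteout: hide the lower-layer file
            else:
                rootfs[path] = content  # upper layers override lower ones
    return rootfs


# Hypothetical two-layer image: a base layer plus an application layer.
base: Layer = {"/etc/os-release": "toy-os", "/tmp/cache": "stale"}
app: Layer = {"/app/main.py": "print('hi')", "/tmp/cache": None}

registry: Dict[str, bytes] = {}  # stands in for a remote registry
manifest: List[str] = []         # ordered layer digests, bottom layer first
for layer in (base, app):
    blob = json.dumps(layer).encode()
    registry[digest_of(blob)] = blob
    manifest.append(digest_of(blob))

rootfs = apply_layers([pull_layer(registry, d) for d in manifest])
print(sorted(rootfs))  # ['/app/main.py', '/etc/os-release']
```

The design point is the digest check before unpacking. The digest is derived from the content itself, so a corrupted or tampered layer is rejected before it ever becomes part of a container’s filesystem.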

This entire job — from pulling an image to managing a running container — is handled by the runtime.

Let’s visualise it.

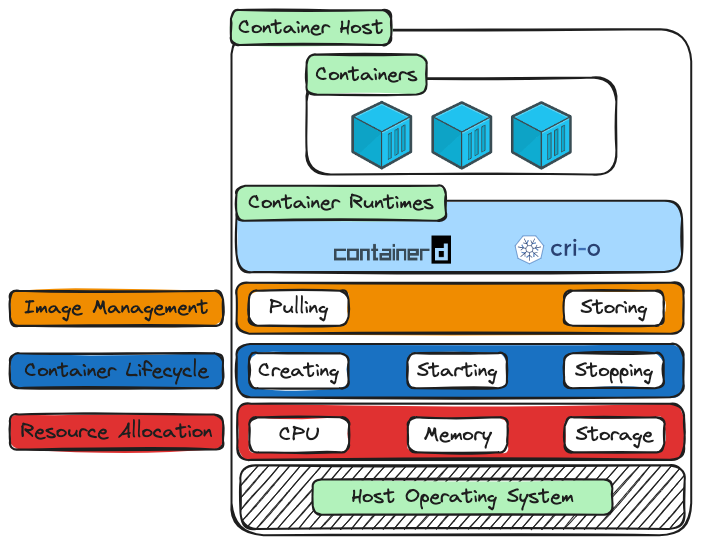

You can think of this diagram as a cutaway view of how containers actually run on a host machine.

At the top, we have the container host — that’s the machine where everything lives.

Inside it: one or more containers, each running an application in its own isolated space.

But containers don’t run themselves.

They rely on a container runtime — a background service that does the actual work of managing them.

In this diagram, you’ll see two popular examples: containerd and CRI-O.

These are real implementations of a container runtime. You don’t interact with them directly most of the time, but they’re doing the heavy lifting.

What does the runtime actually handle?

That’s what the coloured blocks below show:

- Image Management: The runtime is responsible for pulling images from registries and storing them locally. Before anything can run, the runtime makes sure the image is available.

- Container Lifecycle: Once the image is ready, the runtime takes care of the lifecycle: creating the container, starting it, and stopping it when it’s done.

- Resource Allocation: Containers don’t get unlimited access to the host. The runtime controls how much CPU, memory, and storage each one can use. It enforces boundaries so containers don’t interfere with one another.
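Here is a rough illustration of how those boundaries are expressed. The sketch turns Docker-style limits (`--cpus` and `--memory`) into the values a runtime would write into Linux cgroup v2 control files. It assumes cgroup v2, where `cpu.max` holds "quota period" in microseconds and `memory.max` holds a byte count. It also assumes the 100,000 µs period that Docker uses when translating `--cpus`. The function names and the simplified size parser are hypothetical. The code only computes the file contents and never touches `/sys/fs/cgroup`.

```python
from typing import Dict

CPU_PERIOD_US = 100_000  # scheduling period Docker uses for --cpus
UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def parse_size(text: str) -> int:
    """Parse a size such as '256m' or '1g' into bytes (binary units)."""
    text = text.strip().lower()
    if text[-1] in UNITS:
        return round(float(text[:-1]) * UNITS[text[-1]])
    return int(text)  # plain number: already bytes


def cgroup_v2_limits(cpus: float, memory: str) -> Dict[str, str]:
    """Map container limits onto cgroup v2 control-file contents."""
    # round() avoids float artifacts such as 0.29 * 100000 = 28999.999...
    quota_us = round(cpus * CPU_PERIOD_US)
    return {
        "cpu.max": f"{quota_us} {CPU_PERIOD_US}",  # quota and period, in µs
        "memory.max": str(parse_size(memory)),     # hard memory ceiling, bytes
    }


print(cgroup_v2_limits(cpus=1.5, memory="256m"))
# {'cpu.max': '150000 100000', 'memory.max': '268435456'}
```

With a limit of 1.5 CPUs, the container may run for 150 ms of CPU time in every 100 ms window, spread across cores. The kernel then throttles it, whatever else is happening on the host.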

And all of this ultimately runs on top of the host operating system — typically Linux — which provides the features that make isolation and process control possible.

So while you might be using docker run or a YAML file in Kubernetes, what’s actually running your containers is a runtime like containerd or CRI-O.

They don’t just launch containers — they manage the entire lifecycle, enforce limits, and make sure each container plays by the rules.

Understanding this layer gives you a clearer picture of what’s really happening behind the scenes.

In the next act, we’ll zoom in on that runtime box and split it open — because there’s more than one layer inside it.

Let’s take a look.

Act 3 – Two Layers of Runtimes: High-Level vs Low-Level

So far, we’ve treated the container runtime as a single component.

But here’s the interesting part:

It’s not just one thing.

Inside that runtime layer, there are two parts working together — each with a distinct job.

Let’s break it down.

High-Level Runtime: The Orchestrator

The high-level runtime is what we’ve already met — something like containerd or CRI-O.

It doesn’t start containers directly.

Instead, it handles all the coordination:

- Pulling the image

- Preparing the container configuration

- Managing the lifecycle (create, start, stop)

- Tracking the container’s status

- Handling communication with tools like Docker or Kubernetes
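The lifecycle part of that job is essentially a small state machine. The sketch below borrows the four status values that the OCI runtime specification defines: `creating`, `created`, `running`, and `stopped`. It rejects operations that make no sense, such as starting a container that was never fully created. The `Container` class and the operation names (`finish_create`, `start`, `stop`) are hypothetical and greatly simplified. A real high-level runtime also tracks process IDs, exit codes, and events.

```python
from enum import Enum


class State(Enum):
    """Container status values named in the OCI runtime specification."""
    CREATING = "creating"
    CREATED = "created"
    RUNNING = "running"
    STOPPED = "stopped"


# (operation, current state) -> next state. Anything missing is rejected.
# The operation names are illustrative, not a real runtime's API.
TRANSITIONS = {
    ("finish_create", State.CREATING): State.CREATED,
    ("start", State.CREATED): State.RUNNING,
    ("stop", State.RUNNING): State.STOPPED,  # process exited or was stopped
}


class Container:
    """Toy lifecycle tracker for a single container."""

    def __init__(self, container_id: str) -> None:
        self.id = container_id
        self.state = State.CREATING

    def apply(self, op: str) -> State:
        key = (op, self.state)
        if key not in TRANSITIONS:
            raise RuntimeError(
                f"cannot {op!r} container {self.id} while {self.state.value}"
            )
        self.state = TRANSITIONS[key]
        return self.state


c = Container("web-1")
for op in ("finish_create", "start", "stop"):
    print(op, "->", c.apply(op).value)
# finish_create -> created
# start -> running
# stop -> stopped
```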

It’s the runtime you’d interact with if you ever went beyond Docker — though most people never need to.

Low-Level Runtime: The Executor

Once the container is ready to run, the high-level runtime passes the job to a second component — the low-level runtime.

This is usually a tool called runc.

runc takes what the high-level runtime prepared (the unpacked image filesystem, plus a config describing the process to run and its isolation rules) and executes it as a real process on the host system.

This is the final step in the chain.

It’s the part that actually creates the container process using Linux kernel features like cgroups and namespaces.
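What the high-level runtime hands to runc is an OCI bundle: a directory holding the unpacked root filesystem and a `config.json` file. The sketch below builds a heavily trimmed version of that file in Python to show where namespaces and cgroup limits are declared. It assumes field names from the OCI runtime specification: `ociVersion`, `process`, `root`, `linux.namespaces`, and `linux.resources`. The helper name and all values are illustrative. A real config generated by containerd or CRI-O contains many more fields.

```python
import json
from typing import Any, Dict, List


def make_oci_config(args: List[str], memory_bytes: int, cpu_quota_us: int,
                    cpu_period_us: int = 100_000) -> Dict[str, Any]:
    """Build a trimmed-down OCI runtime config (a bundle's config.json)."""
    return {
        "ociVersion": "1.0.2",
        "process": {
            "user": {"uid": 0, "gid": 0},
            "args": args,  # the command the container runs
            "cwd": "/",
            "env": ["PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"],
        },
        # Relative to the bundle directory: the unpacked image filesystem.
        "root": {"path": "rootfs", "readonly": True},
        "hostname": "demo",
        "linux": {
            # Each entry asks the kernel for a fresh namespace of that type.
            "namespaces": [{"type": t} for t in
                           ("pid", "network", "ipc", "uts", "mount")],
            # cgroup limits the low-level runtime applies to the process.
            "resources": {
                "memory": {"limit": memory_bytes},
                "cpu": {"quota": cpu_quota_us, "period": cpu_period_us},
            },
        },
    }


config = make_oci_config(["python3", "app.py"],
                         memory_bytes=256 * 1024**2, cpu_quota_us=50_000)
print(json.dumps(config, indent=2))
```

Tools usually start from the default file that `runc spec` generates rather than writing one by hand. A working config also needs entries this sketch omits, such as mounts for `/proc` and `/dev` and the capability set.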

In simple terms:

- The high-level runtime prepares the container

- The low-level runtime runs it

They work together, like a foreman and a builder:

One handles the plan.

The other makes it real.

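This two-layer model is what makes modern containers portable and modular. Because the hand-off between the layers follows an open standard, the same low-level runtime (usually runc) can sit beneath different high-level runtimes.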

It also explains why, when something goes wrong — like a container failing to start — the logs or errors may come from either layer.

In the next section, we’ll take a closer look at containerd and CRI-O specifically — and see how they fit into real-world container platforms.

Let’s keep going.

Act 4 – Meet containerd and CRI-O: The Two Runtime Giants

By now, you know the high-level runtime is the one doing the orchestration — pulling images, managing container lifecycles, and preparing everything before handing it off to the low-level runtime.

But there’s not just one high-level runtime out there.

There are two major ones used in real-world platforms today:

containerd and CRI-O.

Let’s meet them.

containerd: The All-Purpose Workhorse

containerd (pronounced container-dee) started out as a part of Docker.

It was the internal runtime that Docker used under the hood to manage containers.

Eventually, it was pulled out into its own independent project — and is now maintained by the Cloud Native Computing Foundation (CNCF), the same organisation behind Kubernetes.

Today, containerd is used everywhere:

- It powers the Docker Engine

- It’s the default runtime in most Kubernetes distributions (like AKS, GKE, and k3s)

- It’s designed to be general-purpose and flexible — not just tied to Kubernetes

What makes containerd notable is its broad compatibility and clean architecture.

It handles everything you’d expect from a high-level runtime: pulling images, creating containers, managing lifecycle, and delegating to runc underneath.

It’s also highly modular — so platforms and tooling can extend or integrate with it easily.

If you’ve ever used Docker… you’ve used containerd.

You just didn’t see it.
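Here is how that delegation to runc looks from the outside. The sketch lists the command sequence a high-level runtime could issue for one container. `runc create`, `runc start`, `runc kill`, and `runc delete` are real runc subcommands. `--bundle` points at the directory holding the unpacked filesystem and its `config.json`. The bundle path, container ID, and helper function are hypothetical. containerd does not call runc in exactly this way, because it goes through a per-container shim process. Treat this as a model of the division of labour, not of containerd’s code. The function only builds the argument lists, so it runs without runc installed.

```python
import shlex
from typing import List


def runc_lifecycle(container_id: str, bundle: str) -> List[List[str]]:
    """Return the runc invocations for one create/start/stop/delete cycle."""
    return [
        # Set up namespaces, cgroups, and rootfs, but do not run the process yet.
        ["runc", "create", "--bundle", bundle, container_id],
        # Run the user process defined in the bundle's config.json.
        ["runc", "start", container_id],
        # Send SIGTERM to the container's init process.
        ["runc", "kill", container_id, "SIGTERM"],
        # Remove runc's state for the container once it has stopped.
        ["runc", "delete", container_id],
    ]


for argv in runc_lifecycle("web-1", "/run/demo/bundles/web-1"):
    print(shlex.join(argv))
```

Actually executing these commands, for example with `subprocess.run(argv, check=True)`, requires runc on the host, root privileges, and a real bundle. Splitting `create` from `start` lets a higher layer finish setup work, such as networking, after the container’s namespaces exist but before the application process begins.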

CRI-O: Built for Kubernetes, and Only Kubernetes

CRI-O takes a different path.

It wasn’t designed for general-purpose container use.

It was built specifically for Kubernetes, and nothing else.

The “CRI” in CRI-O stands for Container Runtime Interface, a Kubernetes-defined contract that any runtime must implement in order to plug into Kubernetes.

The “O” comes from OCI, the Open Container Initiative, whose container standards CRI-O implements.

In other words, CRI-O exists to be a minimal, lightweight runtime that speaks Kubernetes fluently — and only Kubernetes.

It doesn’t try to do anything outside that scope.

It doesn’t aim to be pluggable into other systems.

It’s focused, fast, and purpose-built.

This makes it a great fit for environments that want a tightly coupled, Kubernetes-first experience — like Red Hat OpenShift, where CRI-O is the default runtime.

containerd vs. CRI-O at a Glance

| Feature | containerd | CRI-O |
|---|---|---|
| Origin | From Docker (now CNCF) | From Red Hat, for Kubernetes |
| Use Case | General-purpose, Docker & K8s | Kubernetes-only |
| Default In | Docker, AKS, GKE, k3s | OpenShift |
| Extensibility | Highly modular and pluggable | Minimal, focused on CRI compliance |
| Low-level runtime used | runc | runc |
| CNCF Project | ✅ Yes | ✅ Yes |

You don’t have to pick between them in most environments — the choice is usually made for you by the platform you’re running on.

But knowing what they are — and what they’re optimised for — helps you troubleshoot more effectively, understand logs more clearly, and make informed decisions when evaluating platforms.

Let’s keep going.

Now that you’ve met the high-level runtimes, let’s take a step back and ask: Why are there multiple runtimes at all?

Isn’t one enough?

Let’s answer that.

Act 5 – Why Multiple Runtimes?

By now, you’ve met the two most common high-level runtimes: containerd and CRI-O.

They do the same kind of work, but with very different priorities.

And if you’ve been wondering, “Why do we even need more than one?” — you’re not alone.

So let’s zoom out for a moment.

In any fast-moving ecosystem, different tools emerge to solve different problems.

The container world is no exception.

There isn’t a single “right” runtime because there isn’t a single type of container platform.

Some environments need speed and simplicity.

Others prioritise modularity, security, or deep Kubernetes integration.

Some teams want pluggability and ecosystem hooks.

Others want a minimal footprint and zero surprises.

This is why multiple runtimes exist.

It’s not a sign of fragmentation — it’s a sign of maturity.

Think of it like this:

- containerd is general-purpose. It’s broad, flexible, and extensible. It fits well across cloud platforms, local machines, and hybrid systems.

- CRI-O is focused. It’s designed to do one job — run containers for Kubernetes — and do it extremely well.

Each runtime is shaped by the environment it was built for.

And that’s a good thing.

This diversity lets different platforms — like AKS, GKE, k3s, and OpenShift — choose the runtime that fits their needs, without rebuilding everything from scratch.

It’s modular by design.

It’s the cloud-native mindset in action.

The more you work with containers in production, the more you’ll appreciate this.

Because when something breaks — or needs tuning — knowing why your platform chose one runtime over another gives you an edge.

You won’t just read the docs.

You’ll read between the lines.

And you’ll understand how those invisible decisions — like runtime selection — shape everything from startup time to logging, metrics, and troubleshooting paths.

That’s what architectural awareness looks like.

And you’ve just gained it.

Now let’s build on that insight — and take a closer look at the interface that holds all of this together.

Act 6 – The CRI and Why Runtime Modularity Matters

At this point, you understand what container runtimes are.

You’ve met the major players.

And you’ve seen that diversity isn’t a weakness — it’s a sign of thoughtful engineering.

Now let’s go one layer deeper.

Let’s talk about how Kubernetes connects to those runtimes in the first place — and why it doesn’t try to manage containers directly.

Kubernetes Delegates — by Design

Kubernetes is an orchestrator. Its job is to schedule pods (groups of one or more containers) onto nodes, maintain desired state, and coordinate workloads across a cluster.

But it doesn’t run containers itself.

Instead, Kubernetes relies on a well-defined boundary called the Container Runtime Interface (CRI). It is a standard contract between Kubernetes and the container runtime running on each node.

This contract defines exactly how the Kubernetes agent on each node (called the kubelet) can:

- Create a container

- Start it

- Stop it

- Check its status

- Fetch its logs

…without ever knowing how the runtime does it — or even which runtime it is.
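The contract idea can be sketched with a Python `Protocol`. The real CRI is a gRPC API whose runtime service includes methods such as `CreateContainer`, `StartContainer`, `StopContainer`, and `ContainerStatus`. The snake_case methods, the simplified signatures, and the `FakeRuntime` class below are hypothetical stand-ins. The point is that `kubelet_sync` (also hypothetical) is written only against the interface, so any runtime that implements it can be plugged in.

```python
from typing import Dict, Protocol


class RuntimeService(Protocol):
    """Simplified, hypothetical stand-in for the CRI runtime service."""

    def create_container(self, name: str, image: str) -> str: ...
    def start_container(self, container_id: str) -> None: ...
    def stop_container(self, container_id: str, timeout: int) -> None: ...
    def container_status(self, container_id: str) -> str: ...


class FakeRuntime:
    """In-memory runtime; real ones (containerd, CRI-O) are reached over gRPC."""

    def __init__(self, label: str) -> None:
        self.label = label
        self.states: Dict[str, str] = {}

    def create_container(self, name: str, image: str) -> str:
        container_id = f"{self.label}-{name}"
        self.states[container_id] = "created"
        return container_id

    def start_container(self, container_id: str) -> None:
        self.states[container_id] = "running"

    def stop_container(self, container_id: str, timeout: int) -> None:
        self.states[container_id] = "exited"

    def container_status(self, container_id: str) -> str:
        return self.states[container_id]


def kubelet_sync(runtime: RuntimeService, name: str, image: str) -> str:
    """Node-agent logic that depends only on the interface, not the runtime."""
    container_id = runtime.create_container(name, image)
    runtime.start_container(container_id)
    return runtime.container_status(container_id)


for runtime in (FakeRuntime("containerd"), FakeRuntime("cri-o")):
    print(runtime.label, kubelet_sync(runtime, "web", "example.com/web:1.0"))
```

You can replace `FakeRuntime` with any other class that has the same four methods, and `kubelet_sync` needs no changes. That is the property the CRI gives the real kubelet.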

Why Is That Important?

Because it means Kubernetes doesn’t care whether the runtime is containerd, CRI-O, or something else — as long as it implements the CRI.

This separation of concerns is what keeps Kubernetes lean.

It orchestrates.

It delegates.

And it trusts that whatever runtime is on the other side of the CRI will take care of the details.
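In practice, “whatever runtime is on the other side” is selected by a single setting. The kubelet connects to the runtime over a local Unix socket, given by its `--container-runtime-endpoint` setting. Newer Kubernetes versions prefer this value in the kubelet configuration file rather than as a command-line flag. The sketch below assumes the common default socket paths: `/run/containerd/containerd.sock` for containerd and `/var/run/crio/crio.sock` for CRI-O. Your distribution may place them elsewhere. The helper function is hypothetical and only builds the flag string.

```python
from typing import Dict

# Commonly used default CRI socket paths (assumed; check your distribution).
CRI_SOCKETS: Dict[str, str] = {
    "containerd": "/run/containerd/containerd.sock",
    "cri-o": "/var/run/crio/crio.sock",
}


def kubelet_runtime_flag(runtime: str) -> str:
    """Build the kubelet setting that points it at a CRI runtime's socket."""
    try:
        socket_path = CRI_SOCKETS[runtime]
    except KeyError:
        raise ValueError(f"no known CRI socket for {runtime!r}") from None
    return f"--container-runtime-endpoint=unix://{socket_path}"


for name in CRI_SOCKETS:
    print(kubelet_runtime_flag(name))
# --container-runtime-endpoint=unix:///run/containerd/containerd.sock
# --container-runtime-endpoint=unix:///var/run/crio/crio.sock
```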

In other words, Kubernetes says:

“You handle container execution. I’ll handle everything else.”

The CRI Is One of Many Interfaces

This pattern shows up in other parts of the Kubernetes ecosystem too:

- CNI (Container Network Interface) – handles how containers connect to a network

- CSI (Container Storage Interface) – handles how containers mount volumes and manage storage

- CRI (Container Runtime Interface) – handles how containers are created and managed

Each of these follows the same principle:

Don’t hardcode logic.

Define a contract.

Let others implement it.

This is what makes Kubernetes extensible.

And it’s what allows entire platforms to evolve independently — without breaking the system.

Why It Matters to You

You might never implement a container runtime.

You might never write a CNI plugin or a CSI driver.

But if you’re working in cloud-native environments, this design pattern surrounds you.

Understanding how Kubernetes delegates to container runtimes — through the CRI — gives you clarity when reading logs, troubleshooting nodes, or evaluating platforms.

It also helps you see Kubernetes for what it really is:

Not a monolith.

But a composable system, stitched together by clear contracts and interchangeable components.

And now, you’ve seen one of the most important of those connections — up close.

Let’s wrap it up.

In the final act, we’ll zoom out one last time — and reflect on what this all unlocks for developers, operators, architects, and engineers like you.

Act 7 – Reflection and Looking Ahead

Let’s take a step back and see what you now understand.

You’ve unpacked what a container runtime really is: not just a concept, but a real, working layer that pulls images, creates containers, allocates resources, and interfaces with the OS.

You’ve seen that this layer isn’t monolithic. It’s split into two parts: high-level coordination and low-level execution.

You’ve met containerd, CRI-O, and runc — and now you know what each one does, and why it matters.

You’ve seen how Kubernetes doesn’t manage containers directly, but relies on the Container Runtime Interface to hand that job off.

That interface — and the separation it enforces — is what makes Kubernetes modular, scalable, and adaptable across environments.

And that’s the key takeaway here:

Container runtimes aren’t an edge concern.

They’re a foundational part of every containerized platform — from your laptop to production clusters.

You now understand how this part of the system works — not just in theory, but in practice.

Which means you can make better decisions, ask better questions, and troubleshoot with more confidence — no matter which platform you’re working on.

Up Next

In the next article, we’ll build on this runtime foundation — and zoom out to the full Kubernetes execution model.

You’ll see how pods, containers, and runtimes all tie together inside the Kubernetes control loop.

You’ll understand how the scheduler, the kubelet, and the runtime interact — and what really happens when you deploy an app to a cluster.

You’re ready for it now — and everything that follows.

Let’s keep going.